Last week, U.S. copyright policy took two big swings—one toward more clarity, the other straight into chaos.

On Friday, the Copyright Office released its most detailed take yet on AI training and fair use—the legal gray area that sometimes lets AI companies train on copyrighted content without permission.

On Saturday, President Trump fired the person who led that report.

Here’s what actually matters and what it means for creators, publishers, and anyone building with AI.

The Report in Plain English 🗂️

The main question: Can AI companies train on copyrighted work without permission?

Short answer: Maybe. It depends on how the four fair use factors weigh out together.

Here’s how the Office frames them:

Is the use “transformative”?

Training models on existing content can be fair if the goal is for the AI to learn new skills or patterns (like writing style or grammar), not to memorize and reproduce original work.

What kind of work was used?

Factual or public content (like news articles) = lower legal risk

Unpublished or highly creative work (like fiction or screenplays) = higher legal risk

How much was copied?

Using entire works (instead of excerpts) increases risk, but courts may allow it if the AI’s outputs don’t compete with or replace the originals.

Does it harm the creator’s market?

This is the big one. The Office introduced a new idea: market dilution. Even if the AI output isn’t a direct copy, it can still flood the market with “look-alikes,” making the original work less valuable and hurting sales.

The Takeaway:

AI training can be fair use in some cases, but only when the outputs don’t replace the original work or undermine its value in ways that undercut creators’ livelihoods.

Then Came the Firing 🧨

A day after the long-awaited report dropped, Trump fired Shira Perlmutter, the head of the Copyright Office who led it. Her role is one of the most influential in shaping U.S. copyright policy.

Sources say she resisted pressure from Elon Musk to allow broader, unrestricted scraping of copyrighted work for AI training—and instead supported a more nuanced, case-by-case approach.

Her removal signals a likely shift toward looser rules and tech-friendly leadership.

What this creates:

→ Uncertainty for everyone

→ A stronger chance the courts, not policy experts, will shape what’s allowed

What This Means for Creators & Publishers 🧭

Start documenting harm now. Track how AI-generated content overlaps with your work and impacts your traffic, visibility, reach, or revenue.

Lean into the “market dilution” argument. The Copyright Office just gave you language to challenge AI look-alikes that compete with your work—even if they’re not exact copies.

Push for licensing now to secure revenue. I’ve been saying this for the past year and can’t scream it loud enough: The window to lock in rights, terms, and payouts is open—but narrowing. Deals with AI companies are your best defense, and your best shot at getting paid.

What This Means for AI Companies 🛠️

Short-term relief, long-term uncertainty

Without strong federal guidance, companies using unlicensed content may face fewer immediate blocks—but they also lose clarity on where the legal red lines lie.

More lawsuits ahead

More copyright holders may sue (as dozens already have), forcing courts, rather than the Copyright Office, to define fair use for AI training.

Voluntary licensing just became a smarter bet

Expect a faster push toward licensing deals with publishers and rights holders. It’s the clearest path to reducing risk. Just ask OpenAI, which has already cut deals with nearly every major publisher.

We’re heading into a year where the future of AI training, licensing, and content ownership won’t be decided by a single policy, but by a mix of court rulings, licensing deals, and whoever is appointed next to run the Copyright Office.

What You Need to Know About AI This Week ⚡

Clickable links appear underlined in emails and in orange in the Substack app.

In 2023, OpenAI CEO Sam Altman backed the idea of a federal agency to sign off on powerful AI before release. Now he says that kind of oversight would be a “disaster.”

Tech leaders and Trump officials now agree: speed, scale, and military partnerships come first. Critics say earlier safety efforts have been quietly rolled back—even as risks like deepfakes, bias, and abuse grow.

Perplexity AI is partnering with PayPal to let users buy products, book travel, and check out directly in its chat interface—using either PayPal or Venmo.

It’s part of a growing shift into “agentic commerce,” where AI agents don’t just research your options and make a decision, but also complete the transaction.

First, companies used AI to speed up hiring. Now applicants are using AI to game it.

The result? A flooded, less fair process—especially for those without access to paid tools.

Some companies are rethinking the whole flow: dialing back automation and putting human interviews and live assessments back up front.

TikTok brings video generation to the masses.

The platform is rolling out AI Alive—an image-to-video tool that turns still photos into short animated clips. The move makes sense considering ByteDance has been aggressively developing video-language models behind the scenes.

You won’t get the precision of dedicated video-gen tools, but AI Alive wins on accessibility and ease of use—anyone can try it right inside the app.

Here comes even more AI slop…

📤 ChatGPT’s Deep Research reports are now easier to share.

You can now download ChatGPT’s Deep Research reports as well-formatted PDFs—complete with tables, charts, images, linked citations, and sources.

Just click the share icon and select ‘Download as PDF.’

A huge time-saver for anyone sending research to clients, teams, or collaborators.

Audiobooks used to take weeks. Now they can be ready in hours.

Audible’s new AI tools let publishers pick a voice, upload a script, and publish—across multiple languages and regional accents.

Publishers can manage the process themselves—or let Audible handle everything from narration to publication.

Translation tools are next, including AI that preserves a narrator’s voice across languages.

It’s a play to grow its audiobook catalog fast—as Apple, Spotify, and others ramp up.

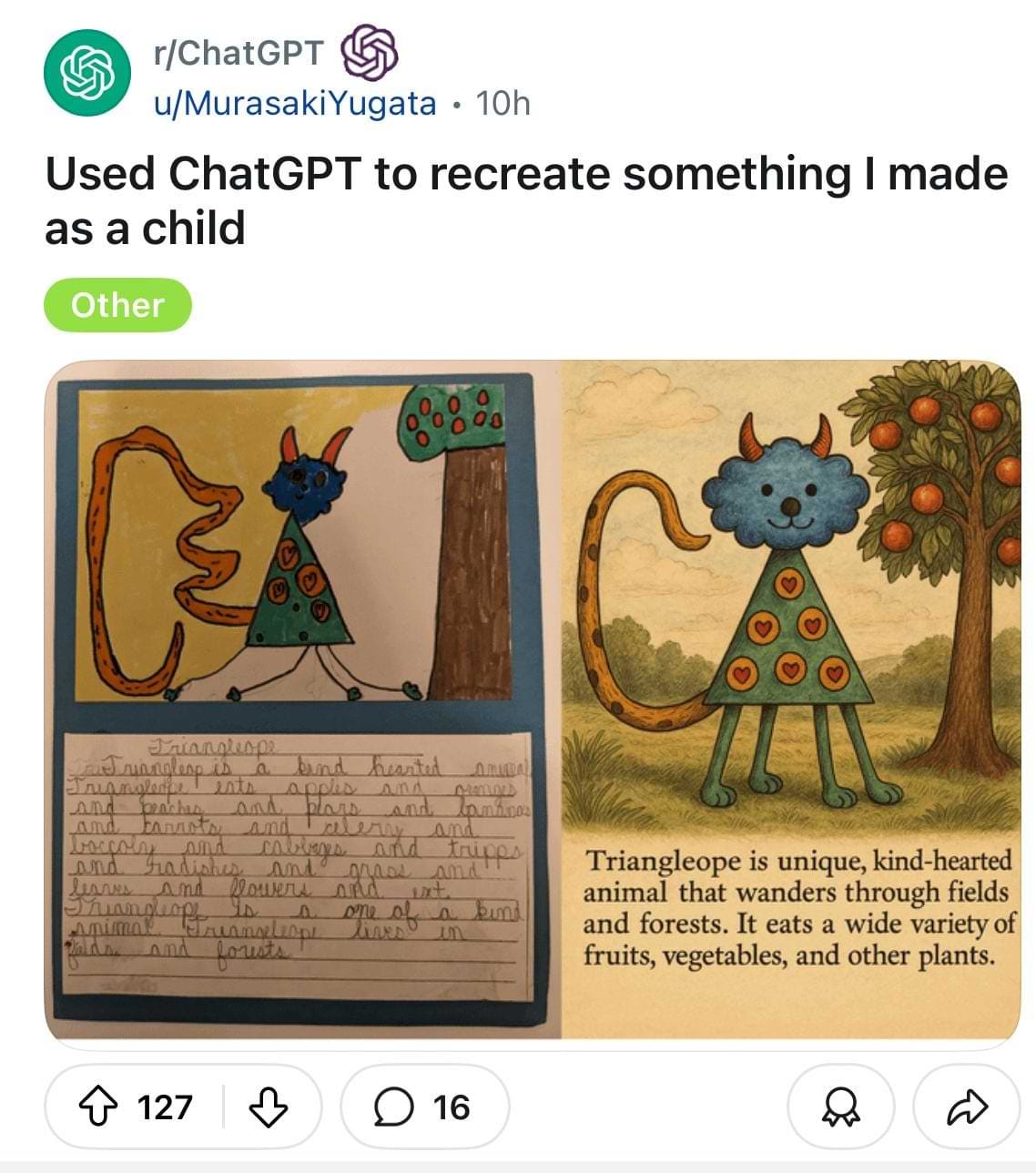

There’s something so beautiful and magical about this.

Someone used ChatGPT to recreate a character they dreamed up—and drew—when they were a kid.

Same imagination. A new canvas.

Triangleope lives. 💙

Jamie Lee Curtis publicly called out Meta after a “totally AI fake” ad used her likeness without consent.

The post—which overlaid fake quotes on a real MSNBC interview image—was removed after Curtis posted an open letter to Zuckerberg on Instagram.

In case you missed last week’s edition, you can find it 👇:

🤓 The Lie of Easy AI

That's all for this week.

I’ll see you next Friday. Thoughts, feedback and questions are always welcome and much appreciated. Shoot me a note at avi@joinsavvyavi.com.

Stay curious,

Avi

💙💙💙 P.S. A huge thank you to my paid subscribers and those of you who share this newsletter with curious friends and coworkers. It takes me about 20+ hours each week to research, curate, simplify the complex, and write this newsletter. So, your support means the world to me, as it helps me make this process sustainable (almost 😄).
