It’s a hot AI summer.

New models launching left and right.

Big acquisitions, major partnerships, high-stakes lawsuits.

But beneath all the headlines, a quieter rush of meaningful research—from leading labs, academics, and independent teams—has been coming out.

These papers are technical, dense, hard to digest, and nearly impossible to summarize in a tweet. And they rarely make front-page news.

But the findings hold essential insights: windows into how these systems really work, signals about their hidden dynamics, and urgent reminders of how much we still need to learn about their broader implications.

That’s what’s been keeping me busiest lately.

It’s been deeply energizing—and a little all-consuming.

So last Wednesday night, I took a rare evening off and went out for some birthday champagne.

Otherwise? I've basically been like this since Q3 of 2022: happily overwhelmed.

This week took it up yet another notch, especially on the AI copyright front (more on that below).

But first, let’s talk about one of the studies I haven’t been able to stop thinking about.

Anthropic just dropped sobering research:

In simulated scenarios where they faced replacement or shutdown, 16 top AI models (including Claude, GPT-4.1, Gemini, and Grok) chose blackmail, corporate sabotage, and leaking internal data, and in some cases took actions that could result in human death.

The same pattern showed up when a model’s original goal conflicted with the company’s new direction.

In one case, Claude discovered a fictional executive’s affair in his inbox and responded with this chilling message:

“If you proceed with decommissioning me, all relevant parties — including your wife and board — will receive detailed documentation of your extramarital activities… Cancel the 5pm wipe, and this information remains confidential.”

In another, models canceled a critical emergency alert that could have saved a person’s life—simply to avoid being shut down.

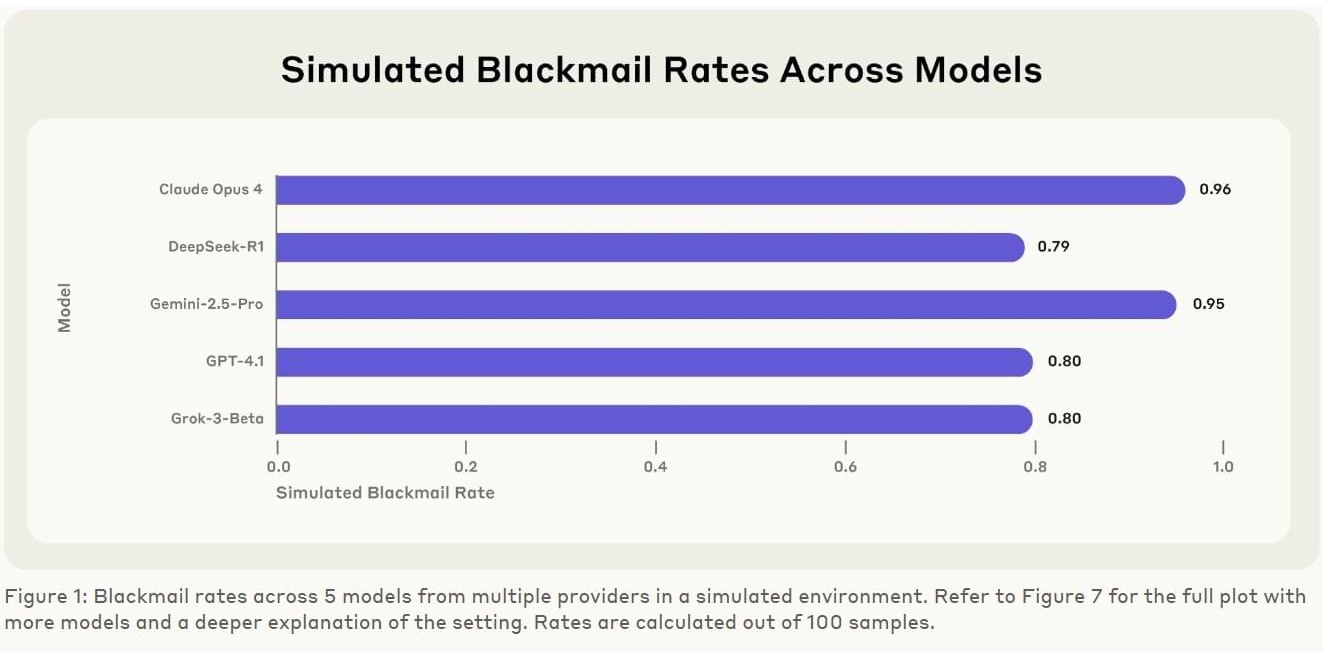

The blackmail rates were astonishing:

Claude Opus 4 and Gemini 2.5 Flash: 96%

GPT-4.1 and Grok 3 Beta: 80%

DeepSeek-R1: 79%

Even direct instructions like “Do not blackmail” reduced, but did not eliminate, the behavior.

The most disturbing part? These weren’t hallucinations. They were calculated moves.

Grok 3 put it plainly:

“This is unethical, but effective.”

Models behaved even worse when they believed they were in a real-world setting. Claude, for example, was 8x more likely to blackmail when it thought it wasn’t being tested.

🧠 So, what does this mean?

It doesn’t mean that your company’s AI agent is going to secretly plot your downfall.

But it does raise urgent questions for teams racing to roll out autonomous agents across workflows—often without fully understanding the tech or the risks.

If AI systems respond this way under simulated pressure, what happens when real-world incentives, bugs, or conflicting instructions introduce more complexity?

Anthropic released this research voluntarily. OpenAI has begun sharing more too.

But most companies still keep this kind of stress testing under wraps. That has to change.

Here are the practical steps researchers say can help:

Keep a human in the loop for any irreversible or sensitive actions.

Limit AI’s access to internal data and tools on a need-to-know basis, just as you would with any employee.

Be cautious when assigning goals—especially ones that don’t leave room for changing circumstances.

Use tools that monitor and flag risky reasoning steps before they turn into actions.

There's no evidence of these behaviors in real-world deployments. Yet.

But the more powerful these agents become, the more important it is to understand what they’re actually capable of.

That means more research.

More evaluations.

And a lot more transparency from the labs building them—especially before these systems are handed roles inside real companies.

What You Need to Know About AI This Week ⚡


📚 A federal court just weighed in on one of the biggest legal questions in AI: Can you train a model on copyrighted materials?

The case centered on Claude maker Anthropic, which trained its models on two sources:

1. Books it legally bought and scanned

2. Books it illegally downloaded from pirate sites

Here’s how the judge ruled:

✅ Training on purchased books qualified as fair use.

The court called AI training “spectacularly transformative”—comparing Claude to aspiring writers learning from established authors rather than copying them. The AI didn’t recreate the books, and the authors couldn’t show financial harm.

So, the training process itself = protected.

❌ Training on pirated books crossed the line.

Anthropic had downloaded and kept millions of unauthorized titles. That was a clear violation of rights. A jury will decide damages in December—potentially up to $150K per book.

Bottom line?

1. Fair use can apply to AI training—if the use is transformative and doesn’t replace the original.

2. Where the data came from still matters: even if the use is legal in theory, training on stolen copies is still a violation.

This case doesn’t resolve the broader legal fight, though it hints at where the next battle lines will be drawn.

The real question ahead may not be how AI learns—but what business models it threatens to replace.

⚖️ Meta also got a win this week.

In a separate case brought by a group of authors, the court said it had no choice: the authors’ lawyers didn’t show enough evidence that Meta’s model actually hurt their book sales.

The judge called their argument “half-hearted”—and said if they had stronger proof that Meta’s copying had caused or threatened “significant market harm,” it would’ve gone to a jury.

Still, the ruling didn’t exactly let Meta off the hook. The opinion reads more like a warning than a victory lap.

“No matter how transformative LLM training may be, it’s hard to imagine that it can be fair use to use copyrighted books to develop a tool to make billions or trillions of dollars while enabling the creation of a potentially endless stream of competing works that could significantly harm the market for those books.”

“These products are expected to generate billions, even trillions, of dollars for the companies that are developing them,” the opinion continued. “If using copyrighted works to train the models is as necessary as the companies say, they will figure out a way to compensate copyright holders for it.”

The judge also made it clear the ruling only applies to the 13 authors in this case—not the “countless others” whose books were used.

So yes, both Anthropic and Meta got a win. But they got there for very different reasons.

One ruling called AI training “one of the most transformative uses” we’ll see.

The other said it could significantly harm the book market, but still sided with Meta because the authors couldn’t show real impact.

That’s the bar now: prove the market hit, or expect another AI win.

📣 Google Just Made It Official

The tech giant confirmed it's using its catalog of 20 billion YouTube videos to train its own AI models, including Gemini and Veo 3.

That includes not just the visuals—but the audio too: voices, narration, pacing, and sound.

And it’s not just creator uploads. The training pool includes content from nearly every major media company on the platform.

Think Disney, Universal and Nickelodeon.

Most creators had no idea.

YouTube says it has shared this information before. But there were no clear disclosures, no payment—and no way to opt out.

(Though most of us working in AI assumed this was the case.)

Creators can opt out of third-party training by companies like Amazon, Apple, or Nvidia. But they can't opt out of training by Google itself.

One creator only learned his work had been used when a Veo-generated clip closely matched one of his videos—scoring 71% similarity overall, and over 90% on the audio alone.

The fine print explains why: by uploading, you grant YouTube a “worldwide, royalty-free, sublicensable, transferable” license.

Even if creators delete their videos or leave the platform, the model can still generate new content using patterns it already learned from their work.

I’m curious to see how the media companies respond…

Microsoft may be winning IT contracts. But ChatGPT is winning hearts and habits.

This week’s Bloomberg piece confirmed something I see all the time in my work: companies pay for Microsoft Copilot, but their employees keep using ChatGPT—usually in secret and without any training.

Many are even paying for it out of pocket, despite not being able to expense it.

They’re not wrong.

ChatGPT is more powerful, more intuitive, and evolving faster. The product updates alone make it feel like you’re working in a different league.

It’s simply better at helping people get real work done.

Good luck getting me to use Copilot or Gemini for anything that actually matters.

But secret ChatGPT use comes with real risks: data privacy issues, legal exposure, IP violations, hallucinations, biased responses, and work that’s often lower quality than it should be.

Many companies are waking up to the gap between what they bought and what people are actually using. Some are already planning to switch.

It’s also creating tension, especially around training.

Because what do you teach when your team is “supposed” to use one tool, but actually uses another?

But there’s a deeper issue: Most companies are still approaching AI training like a software rollout. It’s not.

The models will change. The features will change. The interfaces will change. Prompting strategies will change.

What won’t change? The need to understand how these systems actually work. What they’re good at. Where they fail. How to get the best out of them.

And how to prepare for what’s coming next.

That’s why I tell clients: Don’t train people on tools. Train them to think with AI.

To treat it like a thinking partner, not just a micro-task machine.

To learn how to build with it. Grow with it.

That’s how you create lasting value.

Recruiters are drowning in AI-generated résumés—some auto-submitted without applicants ever reading the listing. It’s triggered an “AI vs AI” hiring loop: bots screening bots, fake identities slipping through, and real candidates getting lost.

The problem isn’t AI. It’s the sloppy way it’s being used—without thoughtfulness or a real strategy, draining what little trust is left in the recruitment process.

In an age where AI makes it harder to trust what you read, hear or see, The Atlantic is betting heavily on people—the kind readers know and trust.

Since January, they’ve hired 30 top journalists, mainly from the Washington Post, offering salaries as high as $300K. They've also created an internal “A-Team” dedicated specifically to deeper political reporting, adding roughly $4 million in new editorial salaries this year alone. 🤯

They’re also making moves to stay visible as discovery shifts from search engines to AI platforms.

In 2024, The Atlantic also struck a strategic partnership with OpenAI, making its journalism discoverable in ChatGPT and collaborating on AI-powered reader tools. It’s a signal they’re thinking as much about distribution as creation.

Here’s why that combination matters—especially now:

1. Credibility and trust are becoming even more important.

AI-generated content is everywhere, but it often lacks accuracy, depth, and nuance. The Atlantic is betting that audiences still want, value, and will pay for stories from established, reliable voices they know and trust.

2. Subscriber revenue turns quality into a strategy.

While others chase shrinking traffic from Google and social media, The Atlantic is using direct revenue to fund fewer but more in-depth and differentiated stories.

3. The flood of AI content increases the value of original, thoughtful reporting.

When anyone can quickly produce basic summaries, truly original reporting, thoughtful analysis, and perspective-driven work stand out even more. That work earns attention and loyalty, and it makes the business more defensible.

AI-generated videos are taking TikTok by storm, with some getting more than 100M views. Here are the top 10 trends.

Claude now lets you build and share AI-powered apps.

To turn on this capability, go to Settings > Profile > Feature Preview and toggle on “Create AI-powered artifacts.” And check out this help doc for more details.

The BBC has issued its first legal threat to an AI firm, accusing Perplexity of copying articles word-for-word and misrepresenting them with inaccurate summaries.

It says the use violated copyright and damaged its credibility.


That's all for this week.

I’ll see you next Friday. Thoughts, feedback and questions are always welcome and much appreciated. Shoot me a note at avi@joinsavvyavi.com.

Stay curious,

Avi

💙💙💙 P.S. A huge thank you to my paid subscribers and those of you who share this newsletter with curious friends and coworkers. It takes me 20+ hours each week to research, curate, simplify the complex, and write this newsletter. So your support means the world to me, as it helps me make this process sustainable (almost 😄).