Quick Reminder:

📅 My AI for Non-Techies workshop is coming up on Friday, May 2nd.

We’ll focus on the most essential, practical, and immediately useful strategies to help you get dramatically better results from AI.

I’ll also walk through the powerful new models OpenAI just launched (o3, o4-mini) and the newest features like ChatGPT Memory, Projects, and Deep Research—tools that open up entirely new possibilities.

📌 Full details are at the bottom of this newsletter. If you’d like to join, sign up below.

Heads up: Registration closes tomorrow (Saturday, April 26 at 10am PST).

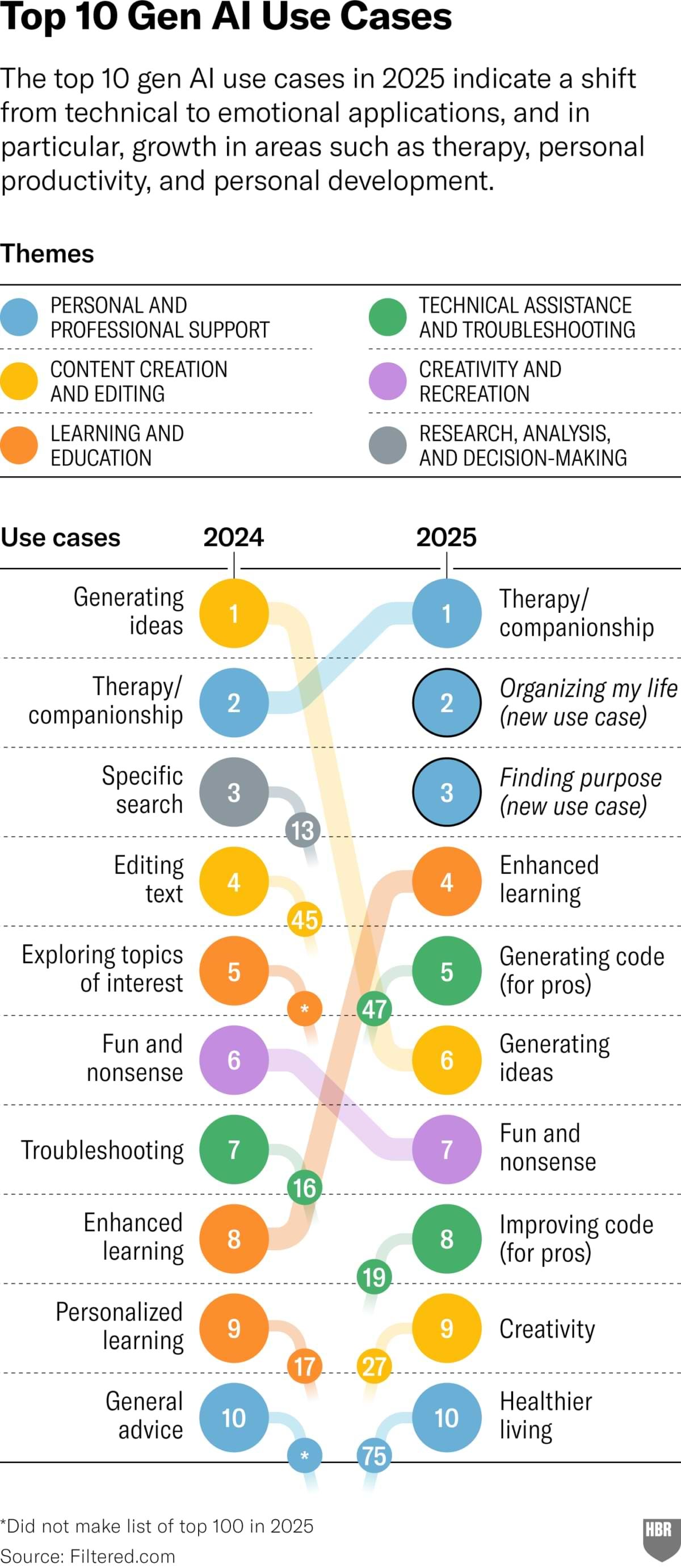

If you assumed the top use for generative AI in 2025 would be copywriting, creating decks, or coding—you’d be wrong.

It’s talking to AI about your feelings.

Harvard Business Review just published an updated report on the actual top 100 Gen AI use cases, this time based on hundreds of Reddit threads (my fave), Quora posts, and forum comments.

Yes, that means the data comes straight from the messy, unfiltered corners of the internet—where people are more likely to say what they’re actually doing with AI, not what they think they’re supposed to say.

In other words, not PR case studies or cherry-picked company wins.

Here’s what rose to the top.

Top 5 Gen AI Use Cases in 2025

(Ranked by perceived usefulness and impact. [NEW] = didn’t appear in the 2024 list.)

Therapy / Companionship

In places where mental health resources are hard to access—or unaffordable—AI has become the closest thing to a lifeline for many. It’s available 24/7 and accessible to anyone with an internet connection. It doesn’t judge. It doesn’t get tired. And it doesn’t ask for insurance.

Organizing my life [NEW]

People are using AI to get more clarity on their goals, whether that’s building new habits, sticking to their New Year’s resolutions, or simply finding ways to get started.

Finding purpose [NEW]

AI is helping people uncover and define their values, reframe problems, and get guidance on how to make decisions that are aligned with what actually matters to them.

Enhanced learning

Think personalized study guides, instant explainers, and better note-taking.

Generating ideas

From business names to book plots to birthday party themes, it’s a brainstorming partner that turns vague thoughts and oddly specific asks into instant possibilities.

What This Tells Us (My Take)

➤ Emotional Needs Are Driving Real Adoption (and Real Value)

Three of the top five use cases—therapy, organizing your life, and finding purpose—aren’t focused on productivity. Instead, they directly address deeper psychological needs: connection, clarity, and belonging.

🎯 Strategic takeaway: Understanding this emotional layer is essential for any business serious about adopting or investing in AI. Productivity gets you trials; emotional resonance gets you loyalty.

—

➤ Individuals (Not Companies) Are Driving Adoption—for Deeper Reasons Than We Thought

Corporate adoption is slow. Companies are understandably cautious because of legal risk, privacy concerns, regulatory pressure, brand safety, and the complexity of compliance.

But that’s likely only part of the story.

Many of AI’s most meaningful uses right now are personal: the kinds of things people do alone, when no one’s looking, just to feel a little more okay.

These aren’t features you roll out across an org. They’re things you try at 1am when you can’t sleep.

🎯 Strategic takeaway: The real competitive edge comes from deeply understanding the emotional and psychological contexts where consumers naturally engage—revealing the future of market demand long before it reaches the boardroom.

—

➤ Reddit & Quora Reveal the Unfiltered Truth

Unlike polished case studies, these insights were pulled from thousands of user-generated posts (many anonymous, all unsolicited). This gives us a window into how people are actually using AI when no one’s watching.

That’s why therapy tops the list, and “writing appeal letters for parking tickets” made the top 100. These are the use cases that surface when people are being honest.

🎯 Strategic takeaway: Real-world AI applications are often unexpected, deeply personal, and reveal market demands invisible to traditional research.

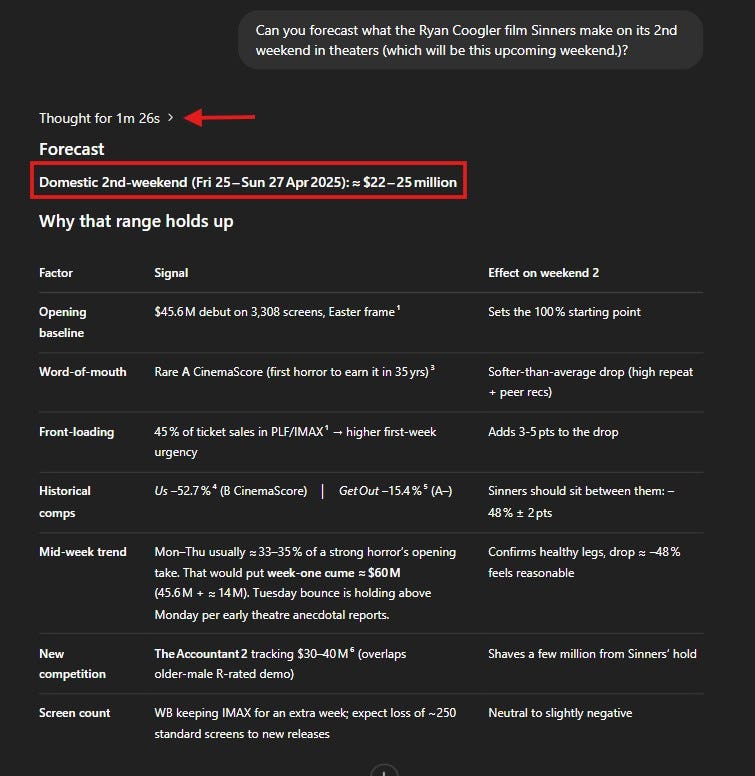

Can ChatGPT forecast the box office? 🍿

OpenAI recently launched its most advanced reasoning model, o3.

With real-time web search now built in, its ability to think through complex problems just got a big upgrade.

I wanted to see what that meant in practical terms—so I put it to the test.

Right after Sinners opened to $45.6M domestically last weekend, I gave it a challenge:

Forecast the film’s second-weekend box office.

It thought for 1 minute and 26 seconds, ran multiple real-time web searches, and came back with this:

$22–25 million (for April 25–27).

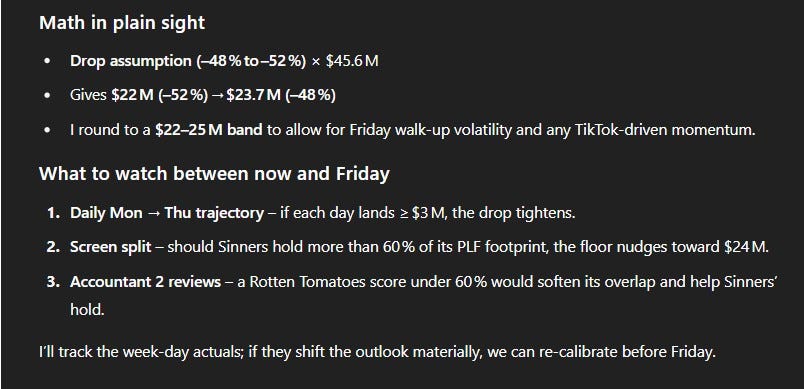

But the real story was how it got there. It:

💠 Pulled real-time data: Weekend grosses, weekday drops, CinemaScore ("A"—rare for horror), premium screen share, and tracking for new releases like The Accountant 2

💠 Benchmarked against historical comps: Us (–53%) and Get Out

💠 Clearly outlined assumptions, volatility factors, and conditions to revise its estimates: It landed on a –48% to –52% drop, leaving room to adjust based on mid-week trends and social momentum.

And it did all of this using only publicly available data—no added context or detailed instructions.

The analysis would be much stronger with expert input, internal data, and a well-structured prompt.
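For anyone who wants to check the math, here is a minimal Python sketch of what that drop range implies. The function name and the rounding are illustrative assumptions, not anything o3 produced. The only inputs taken from above are the $45.6M opening and the –48% to –52% drop.

```python
def second_weekend_range(opening_millions, min_drop, max_drop):
    """Return (low, high) second-weekend gross in $M for a range of drops.

    Drops are fractions, e.g. 0.48 for a 48% week-over-week decline.
    This is plain percentage arithmetic, not a forecasting model.
    """
    low = opening_millions * (1 - max_drop)   # the bigger drop gives the lower gross
    high = opening_millions * (1 - min_drop)  # the smaller drop gives the higher gross
    return round(low, 1), round(high, 1)


# Figures quoted in the newsletter: $45.6M opening, 48% to 52% drop.
low, high = second_weekend_range(45.6, 0.48, 0.52)
print(f"${low}M to ${high}M")
```

Run as written, this gives a range of roughly $21.9M to $23.7M. By the same arithmetic, the $25M top end of the forecast would correspond to a drop closer to 45%, so the headline range is a little more generous than the stated drop alone.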

The use case here is box office. But the implications go far beyond it.

If your work involves strategic planning, competitive analysis, or making sense of shifting market signals, these models are starting to become genuinely useful.

You don’t need to bet the business on them.

But you do need to start testing where they can sharpen your edge.

Will it nail this forecast? We'll find out after this weekend.

Stay tuned.

What You Need to Know About AI This Week ⚡

Clickable links appear underlined in emails and in orange in the Substack app.

ChatGPT now personalizes your web searches.

As I mentioned last week, OpenAI’s memory feature now works alongside other capabilities—including web search.

That means ChatGPT now factors in what it already knows about you—your preferences, habits, taste, location—to rewrite the search query based on your past conversations and make the results more relevant.

So, if you’ve mentioned that you’re vegan, live in SF, and prefer casual spots you can walk to, a vague prompt like “Where should I eat tonight?” might get reinterpreted as “casual vegan restaurants in the Mission District, walkable.”

Not what you typed—but exactly what you meant.

For consumers, every search gets smarter—because it’s shaped by what you care about. That means better answers with less effort.

For marketers, this is a big shift. The AI acts as a gatekeeper, filtering options based on relevance. If it doesn’t see your products, services or content as the right fit for that user’s context—intent, preferences, budget, lifestyle—you don’t show up, so you’re not even in the running.

There are A LOT of moving parts—but you start by understanding how this tech works so you can begin to reshape your strategy.

The personalization shift is just one piece of a much bigger disruption.

AI agents, powered by LLMs (large language models like the ones behind ChatGPT), are increasingly taking over many parts of the customer journey: researching options, summarizing reviews, comparing products, and even narrowing down shortlists before a human ever clicks your site.

Bain & Company just published a breakdown of what this means: Marketing’s New Middleman: AI Agents.

If you're in brand strategy, marketing, content, or search, stop what you're doing and read it.

It’s one of the better explainers I’ve seen on how AI search is changing the way people discover, compare, and make purchase decisions, and why marketers need to rethink their digital strategy for a future where the AI agent, not the buyer, is the real audience.

One part I’m especially excited about:

“Within LLMs, expert opinions, earned media, and customer or forum commentary carry greater weight in search results, because the LLMs seek to validate the claims of branded company sites.”

This is a huge opportunity for brands that genuinely understand how influence actually works.

🤔 What People Are Actually Doing in AI Search

Google’s head of Search, Elizabeth Reid, just offered a rare inside look at how search behavior is changing: longer, more detailed questions and a clear shift toward trusting answers grounded in real, human voices.

This is another must-read for brand strategists and marketers.

OpenAI upped usage caps for ChatGPT Plus subscribers.

💠 o3: 100 messages/week

💠 o4-mini-high: 100/day

💠 o4-mini: 300/day

💠 Deep Research: 25 queries/month

I honestly can’t believe we get this much intelligence for just $20 a month. 🤯

The Washington Post just became the latest major publisher to partner with OpenAI.

ChatGPT can now surface “summaries, quotes, and links” to Post stories in response to relevant queries.

It joins a growing list of partners—including the FT, News Corp, AP, Axel Springer, The Atlantic, and more—as publishers race to stay visible in an AI-first search world.

ChatGPT now has 500M weekly active users.

For publishers, showing up in those answers may be the new front page.

Claude maker Anthropic has been researching the possibility of AI consciousness—and what ethical responsibilities might follow.

They’re asking questions like:

Could future AI systems actually become conscious?

If so, what does ethical treatment look like?

Should an AI be allowed to end an abusive interaction?

We’re not there yet—but the fact that serious people are even asking says a lot about where this is headed.

A new study shows that while ChatGPT is strong at math and logic, it often makes judgment calls the same way people do—and with the same blind spots.

In nearly half the tests, it showed classic human decision-making biases, including:

Overconfidence: It overestimated its own accuracy.

Risk aversion: It avoided risk, sticking with the safer option—even when the riskier one had a clearly better payoff.

Gambler’s fallacy: It made decisions based on past outcomes—assuming a pattern (like “this has happened three times, so it won’t happen again”) even when each choice should’ve been treated independently.

Researchers say these patterns suggest that without human oversight, AI may actually scale bias and bad decisions.

Meta used millions of pirated books to train its AI—then argued in court that “none of Plaintiffs’ works has economic value, individually, as training data.”

Newly unsealed filings show staff removed author credits, avoided legal review, and called licensing “a waste of time.” The case is ongoing, with the authors suing over what they call systemic theft.

This in-depth Vanity Fair piece offers a rare look at how a leading AI company is thinking about copyright, licensing, and attribution—and how that thinking is shaping its strategy, its arguments, and the rules the rest of us might have to play by.

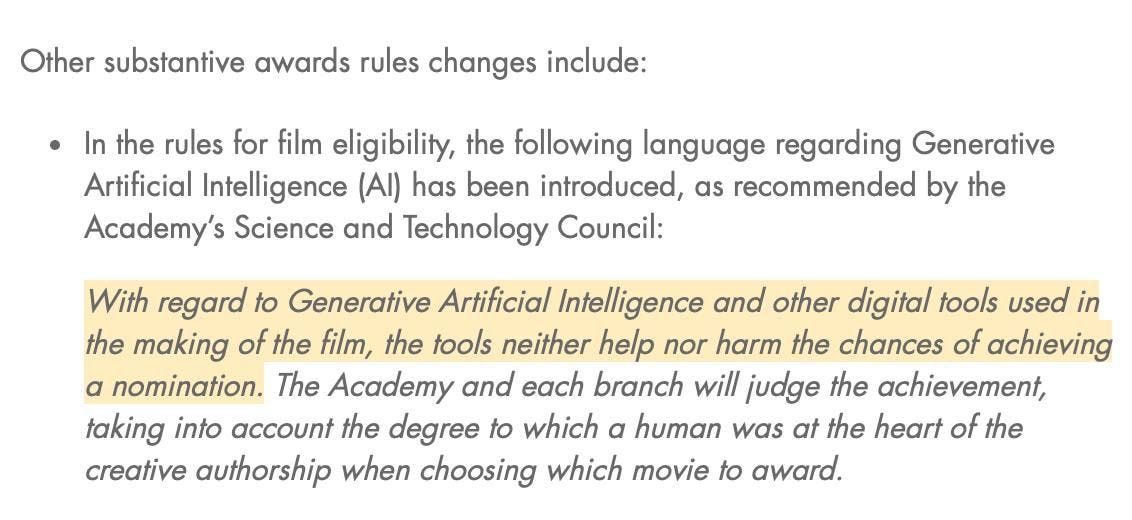

🥇 Oscars Say AI Is OK—Sort of

In its latest rule update, the Academy of Motion Picture Arts and Sciences officially addressed generative AI—for the first time.

The updated “Eligibility” section, which applies to next year’s Oscars, includes the following:

"With regard to Generative Artificial Intelligence and other digital tools used in the making of the film, the tools neither help nor harm the chances of achieving a nomination.

The Academy and each branch will judge the achievement, taking into account the degree to which a human was at the heart of the creative authorship when choosing which movie to award."

In short: AI doesn’t disqualify a film. But the more human, the better your shot at winning.

It’s good to see some clarity here—even if the edges are still fuzzy. The creatives doing the work deserve to know where things stand.

Claude maker Anthropic says we’re less than a year from seeing AI “employees” working for companies—complete with their own roles, memory, system access, and the ability to act on their own.

That kind of independence raises major security and accountability questions most companies aren’t remotely ready to answer.

A Columbia student suspended for building an AI tool to cheat on job interviews just raised $5.3M for a startup promising tools to “cheat on everything.”

ChatGPT Search use in Europe has quadrupled in six months—putting it on track to trigger strict EU rules that would force OpenAI to explain how it works, let users opt out of personalization, and share more data with regulators.

The White House is considering a draft executive order that would bring AI into K–12 classrooms—training teachers across subjects, funding school programs, and encouraging partnerships with industry.

It’s not final yet, but for parents, it’s a sign of what’s (hopefully) coming.

I hope it comes real soon.

Now someone just needs to train the parents. 😁

AI for Non-Techies Workshop Series:

📋 Each workshop series includes:

A Live 4-hour Interactive Session (no on-demand viewing)

An optional one-hour Office Hours session dedicated to answering your questions

You'll Walk Away With:

🎯 Practical Know-How and Confidence: You’ll have all the knowledge you need to start experimenting and working with AI.

🎯 Discovery of Personalized Use Cases: You’ll uncover ways to use AI for your specific needs for work or personal projects—whether that’s research, improving your writing and editing process, brainstorming, or summarizing long and complex documents (among many other uses).

🎯 Hands-On Experience with ChatGPT: We'll do an interactive walkthrough of ChatGPT's updated interface and newest features (including Projects, Deep Research, and Memory) so you can navigate the latest layout with ease.

🎯 An Essential Cheat Sheet: A takeaway resource packed with key insights, strategies, techniques and examples from the session—designed to help you keep putting what you’ve learned into practice.

—

📅 Dates: Workshop Series E – May 2025

Session: Friday, May 2nd, 12:00 PM – 4:00 PM PST | 3:00 PM – 7:00 PM EST

Office Hours (Optional): May 4th, 12:00 PM – 1:00 PM PST | 3:00 PM – 4:00 PM EST

--

What We’ll Cover

✅ Intro to Large Language Models (LLMs) like ChatGPT: What They Are & How They Work

✅ The Limits of LLMs: What They Can’t Do

✅ Prompting Strategies + Examples: Your Guide to High Quality Results

✅ Walkthrough of ChatGPT’s Updated Interface and Newest Features

✅ Best Practices for AI Interactions: Do’s and Don’ts

✅ Top Performing Models: Which Should You Use?

---

Investment: $329

I’m limiting the number of spots to keep our sessions more interactive and personalized.

If you're interested, be sure to register soon to secure your spot. They’re available on a first-come, first-served basis.

Got questions? Just hit reply and ask, or check out the FAQs at the bottom of the page here.

If you're interested in a private group session, reach out to me directly at avi@joinsavvyavi.com.

And feel free to share this invitation with friends who might be interested.

P.S. If money is tight or you're between jobs right now, I get it and want to help. I'm convinced that AI skills are essential to navigating the shifting job market and opening up new (and previously unimaginable) possibilities. So just reach out, and we'll work something out.

In case you missed last week’s edition, you can find it 👇:

That's all for this week.

I’ll see you next Friday. Thoughts, feedback and questions are always welcome and much appreciated. Shoot me a note at avi@joinsavvyavi.com.

Stay curious,

Avi

💙💙💙 P.S. A huge thank you to my paid subscribers and those of you who share this newsletter with curious friends and coworkers. It takes me 25+ hours each week to research, curate, simplify the complex, and write this newsletter. So, your support means the world to me, as it helps me make this process sustainable (almost 😄).

That's a fascinating piece of information, Avi: therapy is the most popular use of AI. I wonder what the implications are... that therapy is too inaccessible and/or expensive for so many who need it? So AI models can step in and fill the gap.

My other burning question is, does AI actually make a good therapist? In the realm of dream analysis, I know the AI models do a pretty good job of offering plausible possible meanings of our dreams... but a pretty lousy job at filling our basic need for human connection. I wonder if it becomes like social media - a pseudo-connection that helps just enough, but because of this, might lead to further isolation because we are less inclined to reach out to a real person. I'm reminded of the movie Her. I wonder what you and others think.