🤓 GPT-5 Is Here. And It’s a Big Deal.

PLUS: This Week in AI

For AI super-nerds like me, yesterday felt a little like Christmas morning.

🎁 The long-awaited GPT-5 has finally arrived.

According to OpenAI, it’s the most capable, intuitive, and reliable AI model released so far, and the easiest to use well.

It’s smarter across the board, from deep analysis to creative work, with far fewer “hallucinations” (confidently making things up).

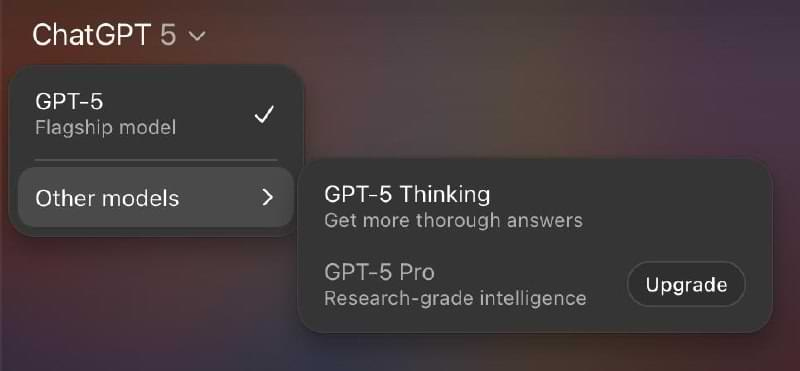

GPT-5 is replacing all other models in ChatGPT.

And for the first time, you don’t have to choose the right model for each task.

When you ask a question, a new built-in router decides how much thinking GPT-5 needs to do, picking the right mode based on conversation type, user intent, and complexity:

GPT-5: for most tasks

GPT-5 Thinking: for more complex reasoning and harder problems

You can still choose Thinking mode manually (subject to usage caps), but for most people, the router means one less thing to figure out while still getting high-quality results.

(So yes, if you read last week’s deep dive on which ChatGPT model to use, it’s already obsolete. That’s the pace we’re moving at. But the strategy and thinking behind it still matter, especially with “Thinking” mode sticking around.)

GPT-5 is also more proactive, offering follow-up ideas instead of waiting for you to think of the next step.

It’s rolling out now to all tiers (Free, Plus, Pro, and Team), with usage limits that depend on your plan.

Message caps:

Plus ($20/month): 200 “Thinking” messages/week, plus 80 GPT-5 messages every 3 hours

Free: 1 “Thinking” message/day, plus 10 GPT-5 messages every 5 hours

A small group of early testers is already raving about it, though I’ll need a week or so of hands-on time before I share my verdict.

Until then, happy exploring.

P.S. If you have questions about the new setup, hit reply and ask. I’ll share answers to the most common ones next week.

What You Need to Know About AI This Week ⚡

Also, on this week’s edition of “OpenAI Eats the World”:

OpenAI released its first open-weight model in six years: a free GPT you can download and run directly on your own computer.

It’s called gpt-oss, and because it runs locally, your data stays private. The smaller of its two versions runs on a laptop with 16GB of memory, with no special hardware or even Wi-Fi required.

Open-weight means the model’s trained parameters (its “weights”) are publicly released, so anyone can download, study, adapt, and run it themselves.

Why does that matter?

For individuals: It makes it possible to use AI agents to browse the web, manage files, or work with private documents, health data, and other sensitive material without handing over personal information.

For organizations: Especially in regulated fields like healthcare, finance, or government, it offers a way to use AI securely within their own systems, customize it for their needs, and keep data fully in-house.

—

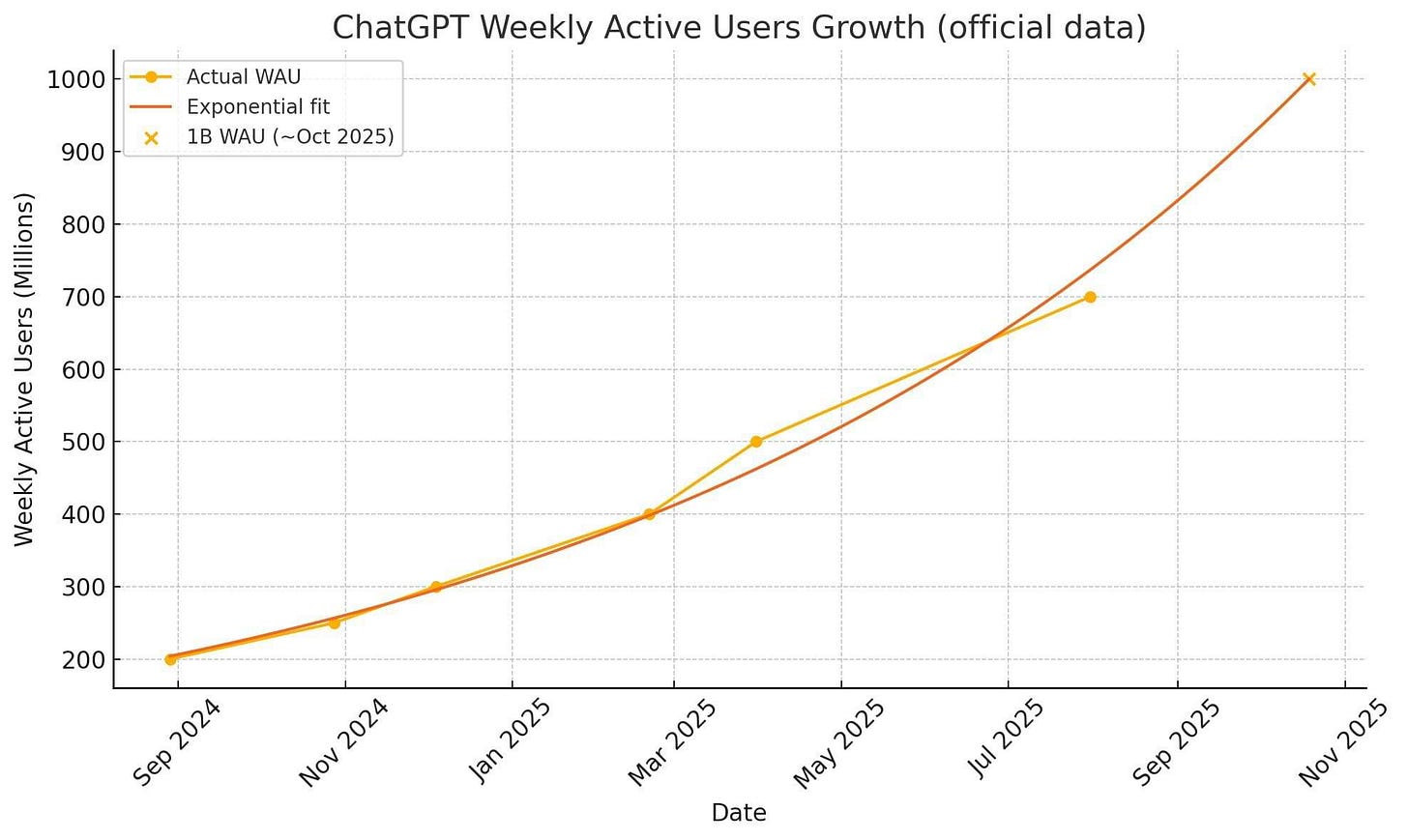

ChatGPT is set to hit 700M weekly active users this week (up from 500M in March) and is on track to reach one billion by October.

If you’re in brand strategy, marketing, comms, or PR, and you’ve been reading this newsletter, you know this jump in active users signals how quickly consumer behavior is changing—and why your strategy should already be evolving.

Hopefully, it already has.

Meanwhile, OpenAI is in talks for a share sale at a $500 billion valuation (up from $300 billion just this spring 🤯), giving current and former employees a chance to cash out. If the deal goes through, OpenAI would pass SpaceX as the world’s most valuable private company.

The company has also struck a deal with the U.S. government to provide ChatGPT Enterprise to every federal agency, for just $1 per agency for the next year.

🎵 ElevenLabs just launched an AI music generator it claims is cleared for commercial use.

The startup best known for its eerily realistic AI voices says its new model can create fully customizable songs in seconds: you choose the genre, mood, and structure, then tweak individual sections, swap lyrics, or add vocals.

It also works in multiple languages, from English to Japanese.

ElevenLabs says the model is trained only on licensed and public-domain tracks, including catalogs from publishers like Kobalt and Merlin.

But it isn’t sharing its training dataset. So for now, trust hinges on exactly that: trust.

If that claim holds, this could unlock a wave of AI-scored ads, trailers, playlists, and creator content without the legal gray zone haunting rivals like Suno and Udio, which are now being sued by the music industry.

ElevenLabs sees its opening: become the “legally safe” alternative.

For brands, even the perception of “safe” is powerful. It answers the legal-department dread that has slowed AI adoption in production.

🚩 One caveat: Unlike Microsoft Copilot or Adobe Firefly, which promise to shoulder the risk if copyright disputes arise, ElevenLabs offers no such protection. ‘Safe’ here is marketing, not a legal shield.

First voices, then sound effects, now music.

Bit by bit, ElevenLabs is turning into a one-stop shop for AI audio.

Google had a few launches this week. I’ll start with my favorite.

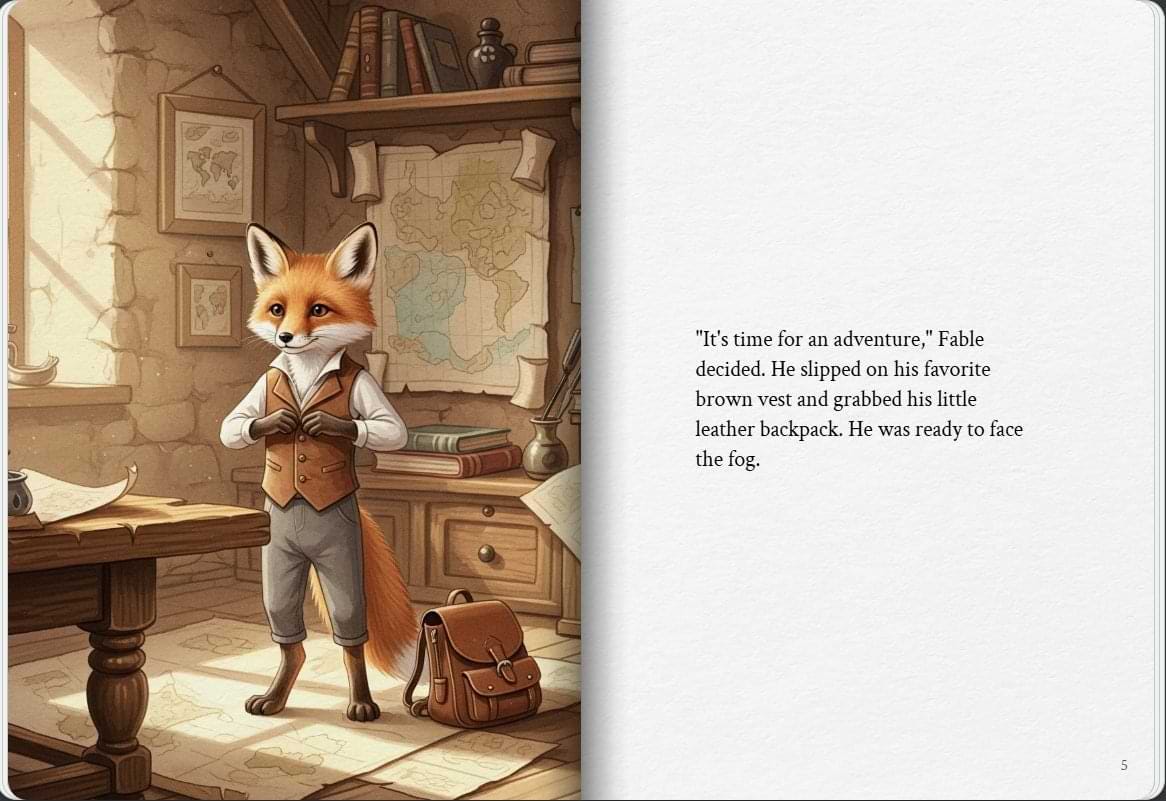

📚 Storybook (in the Gemini app)

You can now use Gemini to create personalized, illustrated 10-page storybooks about anything, and have it read them out loud.

You can pick the art style (claymation, anime, comics) and even upload photos or other images for it to reference—for example, uploading a child’s drawing and turning it into a full story.

It’s pretty amazing, and you can try it now in the Gemini app.

🧠 Deep Think reasoning model

Google also launched its Deep Think reasoning model inside the Gemini app, but only for those on its top-tier Ultra plan ($250/month).

Deep Think is designed to take more time thinking through complex problems using what Google calls “parallel thinking.” It brainstorms multiple ideas at once, refines them, and picks the best answer—leading to higher-quality responses for things like coding, research, creativity, and strategic planning.

🎮 Genie 3 (by DeepMind)

Google’s new “world model”—an AI system that simulates interactive environments—can generate 3D playable worlds from text prompts. These models can be used to create games or to help train robots and AI agents.

This wasn’t a full launch, though. It’s only available to a handful of researchers and creators for now, while Google figures out what could go wrong.

🧑🏫 Guided Learning

Gemini’s new Guided Learning mode uses diagrams, videos, and quizzes to break down problems step by step, acting more like a tutor than an answer engine (similar to ChatGPT’s Study Mode, which rolled out last week).

🤑 AI salaries have officially entered “NBA star” territory.

Meta just lured a 24-year-old researcher with a $250M offer—more than Steph Curry’s last 4-year deal.

When talent this scarce can swing company fortunes by billions, it’s no wonder Zuck is making late-night calls like a team owner begging for a trade.

Disney planned to use AI in two upcoming movies, the live-action Moana and Tron: Ares, but both plans were shut down: one over legal uncertainty, the other over PR risk.

For studios, ownership fights and union talks are big hurdles. But the real test will be audience tolerance, which could decide how far Hollywood actually pushes AI.

Character.AI (the app for chatting with AI characters) just added a social feed.

Users can now share chat snippets, post AI-generated images or videos, and even have their characters debate topics on a livestream.

That's all for this week.

I’ll see you next Friday. Thoughts, feedback and questions are always welcome and much appreciated. Shoot me a note at avi@joinsavvyavi.com.

Stay curious,

Avi

💙💙💙 P.S. A huge thank you to my paid subscribers and those of you who share this newsletter with curious friends and coworkers. It takes me 20+ hours each week to research, curate, simplify the complex, and write this newsletter, so your support means the world to me. It helps make this process sustainable (almost 😄).