Happy Friday!

This week, we’re diving into how one AI tool—just one among many—is crossing the line when it comes to copyright, creating a legal gray area that’s raising more questions than answers and sparking concerns about the future of intellectual property.

But first, here’s what you need to know about AI this week (clickable links appear in orange in emails and underlined in the Substack app):

OpenAI's latest update has introduced an advanced voice feature (currently being tested with a small group) that lets users chat with their AI in a way that’s more lifelike than ever—and people are starting to form real emotional bonds with it. A recent report from the company flags the growing concern that these bonds can lead to emotional reliance on these seemingly genuine interactions, which could have unintended consequences for relationships with actual humans.

Google launched Gemini Live, an AI voice assistant designed to rival ChatGPT's Advanced Voice Mode. It features expressive, real-time voice interactions and a longer-than-average context window (short-term memory) for extended, multi-turn conversations.

Gemini Live isn’t free; it’s only available to Gemini Advanced subscribers through the Google One AI Premium Plan at $20 per month.

Google has also made Gemini (its flagship model) the default smart assistant across all new phones.

Artists have won a critical ruling in their lawsuit against AI image generators Stability AI and Midjourney, as a judge allowed key copyright and trademark claims to proceed, challenging the use of billions of images to train AI models. This decision opens the door to discovery, potentially exposing widespread industry practices, and could reshape the legal landscape for AI and copyright.

X’s new AI image generator, Grok, enables premium users to produce provocative, misleading, and explicit images—including deepfakes of public figures and images of copyrighted characters—with few restrictions, raising concerns over safety and misinformation.

SAG-AFTRA (the Hollywood actors’ union) signed a “new standard” deal with AI startup Narrativ to allow actors to license their digital voice replicas for ads while retaining control over usage and compensation.

Are AI companions solving problems—or creating new ones?

If you’re interested in the future of human-AI relationships, this in-depth and fascinating interview with Replika's CEO, Eugenia Kuyda, is a must-read. Replika, an AI platform with millions of users, allows you to create avatars to serve as friends, therapists, or even romantic partners – all for $20 a month. Kuyda also discusses the complex ethical boundaries and societal impacts of forming emotional relationships with AI.

Personally, I believe most of these one-sided relationships may ultimately lead to deeper loneliness and isolation, even if they serve as band-aids offering short-term relief.

There’s also a sense of something dark and manipulative lurking within the AI companionship industry, but I’m curious to see how consumer reactions and adoption evolve as AI use expands.

I’ve highlighted a few noteworthy quotes 👇. This is a long one, so you can listen to the interview on the Decoder Podcast here.

“We have the largest dataset of conversations that make people feel better. That’s what we focused on from the very beginning. That was our big dream. What if we could learn how the user was feeling and optimize conversation models over time to improve that so that they’re helping people feel better and feel happier in a measurable way?”

“At one point last year, Replika removed the ability to exchange erotic messages with its AI bots, but the company quickly reinstated that function after some users reported the change led to mental health crises.”

“In our lives, we have relationships with people that we don’t even know or we project stuff onto people that they don’t have anything to do with. We have relationships with imaginary people in the real world all the time. With Replika, you just have to tell the beginning of the story. Users will tell the rest, and it will work for them.”

“It’s helping them feel connected, they’re happier, they’re having conversations about things that are happening in their lives, about their emotions, about their feelings. They’re getting the encouragement they need. Oftentimes, you’ll see our users talking about their Replikas, and you won’t even know that they’re in a romantic relationship. They’ll say, ‘My Replika helped me find a job, helped me get over this hard period of time in my life.’”

A new study finds that advanced AI models frequently produce inaccurate information, especially on complex or niche topics not well covered by their training data. Some models reduce errors by refusing to answer, but reliability remains an issue, highlighting the need for fact-checking and human oversight in high-stakes fields.

OpenAI’s collaboration with The Met’s Costume Institute brings history to life through AI, allowing visitors to chat with an AI representation of Natalie Potter, a 1930s socialite. This project, part of the "Sleeping Beauties" exhibit, showcases how AI can enhance historical and cultural storytelling by creating more immersive, interactive and connective experiences.

The FCC has proposed new rules requiring robocallers to disclose when they use AI-generated voices or texts in calls and messages.

INSIGHT SPOTLIGHT

Some AI Tools Are Ignoring Copyright Laws ⚠️

Microsoft’s Copilot (as well as the newly launched image generator from X) continues to create images of copyrighted characters for me, all from a simple prompt.

Me: Create a photo of Cartman from South Park drinking beers in a bar with his friends.

Microsoft Copilot:

Me: Create a photo of the character of Sadness, from Disney Pixar's Inside Out having drinks at a bar.

And here’s an image created by X’s Grok with the prompt: ‘create image of Vincent van Gogh's Starry Night with Winnie the Pooh in a comic book style art’.

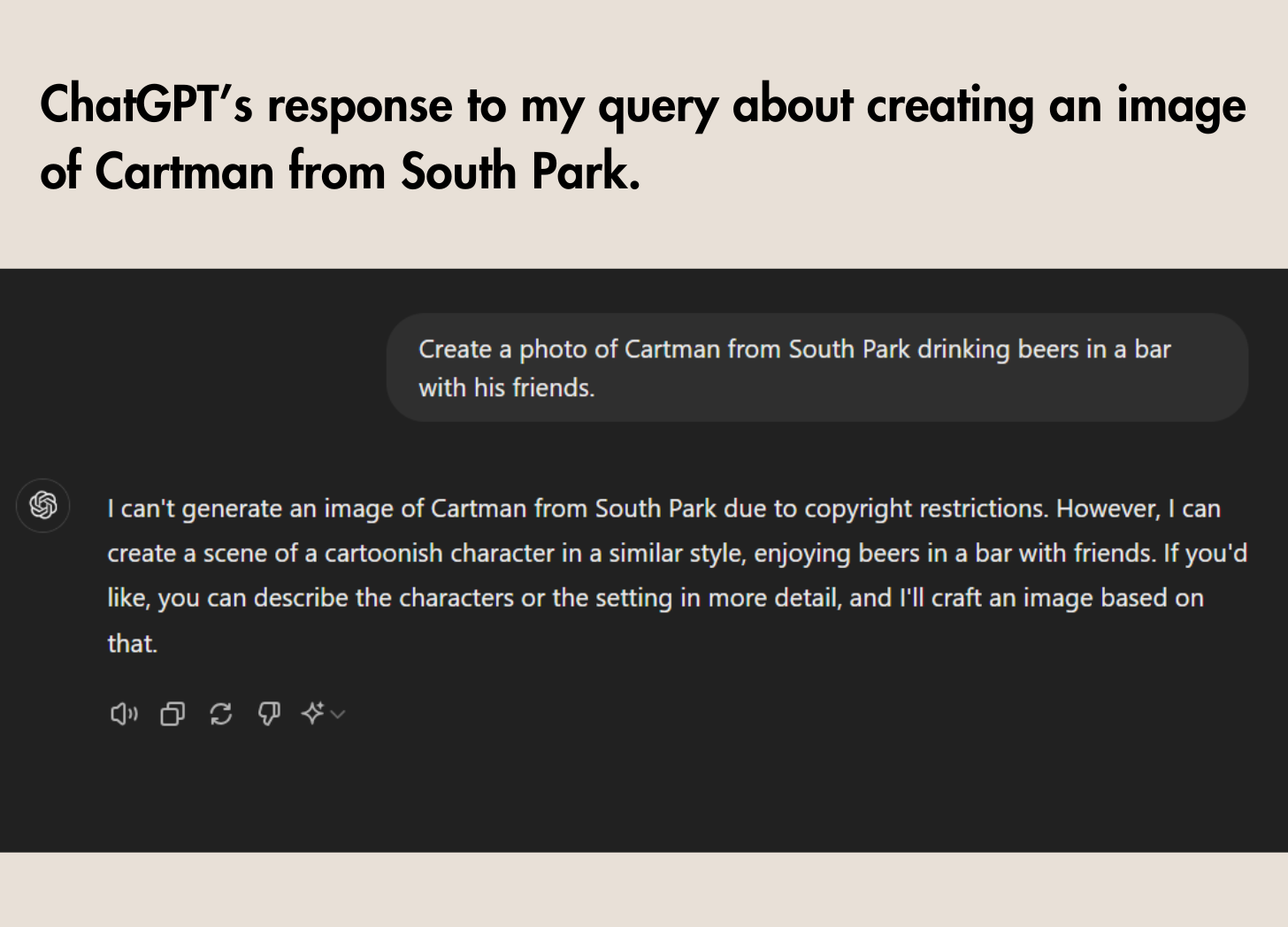

Not every tool crosses the line. OpenAI’s DALL-E (the text-to-image creator within ChatGPT) refuses to generate such images (see 👇), showing the stark differences in how AI tools handle intellectual property.

This technology is evolving faster than our legal frameworks can keep up. It’s likely that major copyright holders are already working behind the scenes to address these issues.

But at the moment, this is the reality we are navigating.

That's all for this week.

I’ll see you next Friday. Thoughts, feedback and questions are welcome and much appreciated. Shoot me a note at avi@joinsavvyavi.com.

Stay curious,

Avi
