Happy Friday!

What if AI could take in what it reads, sees, and hears, and reason over all of it at once, like a human? This week, we explore how this capability, known as multimodal AI, is pushing the boundaries of what AI can do, making it smarter and more versatile across industries.

But first, here’s what you need to know about AI this week (clickable links appear in orange in emails and underlined in the Substack app):

California lawmakers have passed a controversial AI safety bill requiring companies developing advanced AI to implement safeguards like kill switches (emergency shutdowns) and third-party testing to prevent severe harm. While some argue this will help manage AI risks responsibly, others fear it could slow down innovation and create regulatory hurdles for tech companies. The bill now awaits Governor Newsom’s approval or veto by September 30.

Anthropic has launched Claude Enterprise, a rival to OpenAI’s ChatGPT Enterprise plan, designed for companies that prioritize data security and administrative controls. It ensures privacy by not using customer data for AI training.

With a larger context window (or short-term memory) than ChatGPT, it can process and analyze multiple long documents and extensive data in one go, helping businesses handle complex information more efficiently.

I absolutely LOVE Claude. It’s my go-to AI tool for strategic planning and writing. Claude excels at critical thinking and reasoning, breaking down complex topics and developing detailed strategies. It can also analyze visual content, such as charts and images, to deliver deeper insights.

For writing, it’s a fan favorite because it drafts natural, “human-sounding” content that stands out from what other AI tools produce. The main drawback is its lack of internet access for real-time updates, but its overall capabilities still make it a top choice. There is also a free tier with some usage caps.

A North Carolina musician used AI to create fake songs and automated bots to stream them billions of times on platforms like Spotify and Apple Music, collecting $10 million in fraudulent royalties. This case, the first of its kind, shows how AI can be weaponized to manipulate engagement metrics, raising alarms for any industry that depends on digital performance data.

Microsoft and LinkedIn’s 2024 Annual Work Trend Index shows that 71% of leaders would now rather hire a less experienced candidate with AI skills than a more experienced candidate without them. Despite this demand, only 25% of companies plan to offer AI training, forcing many employees (including senior knowledge workers) to take training and upskilling into their own hands.

Also, while 79% of leaders see AI adoption as critical to staying competitive, many lack a clear strategy and worry about how to measure AI’s productivity gains for the company.

⭐ My take: Regardless of your role, if your company doesn’t offer comprehensive AI training, take the initiative to find a trusted source for AI education. Understanding how generative AI works, including its capabilities, its limitations, and how to interact with these models, will help you get better results while minimizing risks. This knowledge is essential for the future of work (and life).

Disney plans to launch an AI-powered app for ESPN's SportsCenter to attract younger streaming audiences by personalizing content to individual preferences. The AI will dynamically generate customized sports news, highlights, and narration, making the experience more relevant, interactive, and engaging.

A new video game studio founded by ex-Riot Games developers is using generative AI to transform non-playable characters (NPCs)—typically limited by scripted responses—into more interactive and dynamic elements of the game.

Players can engage in open-ended conversations with these AI-driven characters, leading to more diverse, immersive and unexpected gameplay experiences. The studio carefully guides the AI's behavior to prevent characters from acting out of context or confusing the player.

AI INSIGHTS

This is the year of multimodal AI. But what does that even mean? 🧐

Multimodal AI is a type of AI that can:

Understand and analyze various types of information across formats like text, images, audio, videos, and code.

Create new outputs across these formats.

AI image and video generators are examples of multimodal AI.

Text instructions ➡️ image or video outputs.

But here’s a practical example:

A multimodal AI takes the agenda from a meeting (text) + an audio recording from the same meeting

⬇️

Processes all the info

⬇️

Creates comprehensive meeting notes including action items + an agenda for the next meeting.

🎯 Why is this so powerful?

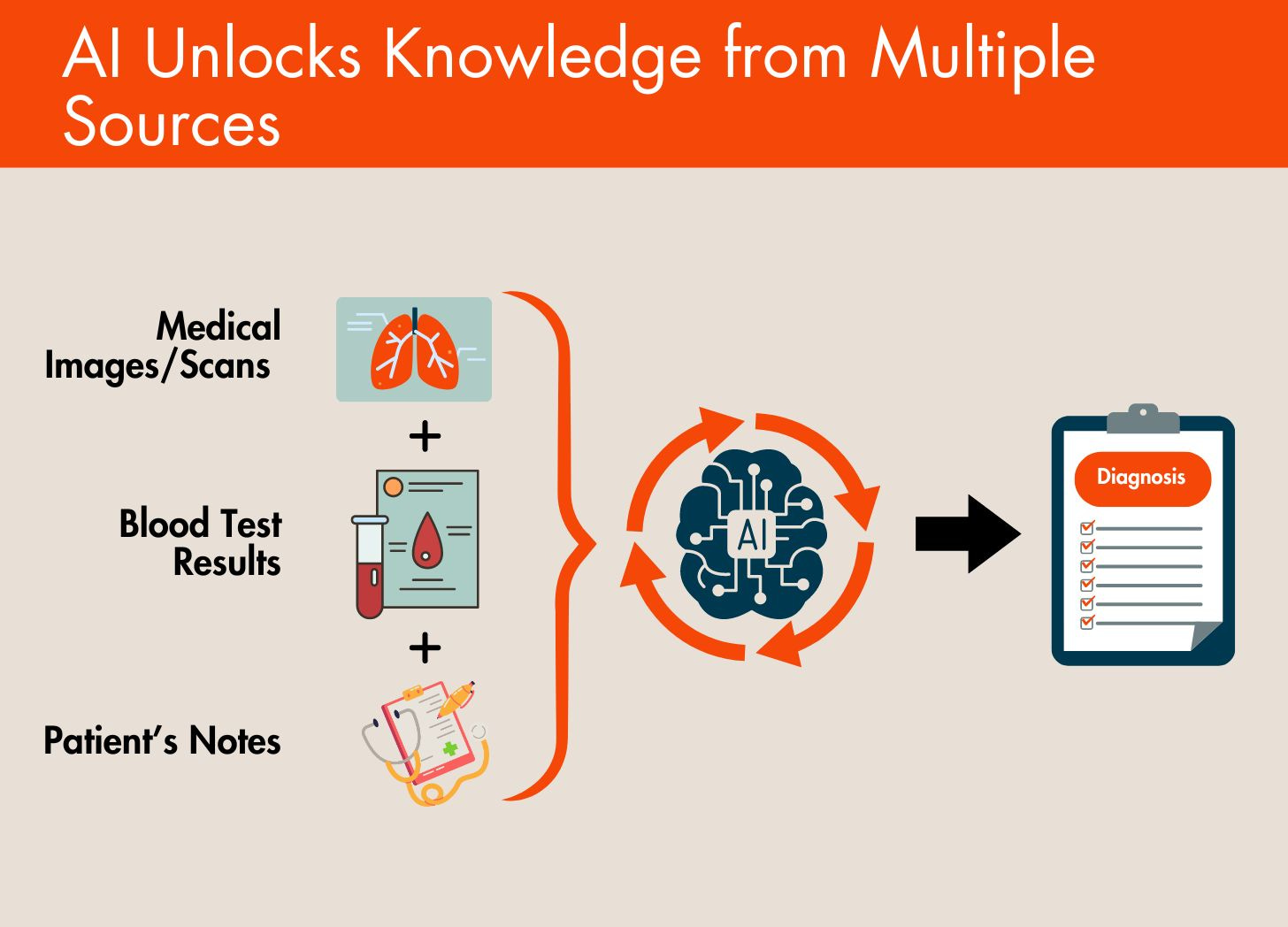

Multimodal capabilities let AI draw on knowledge from multiple sources at once, then apply its reasoning to understand context and nuance, and even find new connections across everything it has taken in.

This can lead to a deeper, more complete understanding of a situation and, in turn, more accurate and higher-quality results.

For example, doctors can use AI to support medical diagnosis. A multimodal AI can:

✅ Interpret medical images or scans, like an MRI

✅ Understand and analyze blood test results

✅ Recall patient notes, including long-term history, past tests, scans, and medications, all in real time.

No one person can keep all of this in mind. AI gives doctors a more complete snapshot of the patient and surfaces patterns across the data, so they can apply their expertise to make a more accurate diagnosis.

Over the next few months, we'll likely see the launch of even more advanced multimodal capabilities, and I'm excited about all that this tech will unlock across industries.

That's all for this week.

I’ll see you next Friday. Thoughts, feedback and questions are welcome and much appreciated. Shoot me a note at avi@joinsavvyavi.com.

Stay curious,

Avi

💙💙💙 P.S. A huge thank you to my paid subscribers and those of you who share this newsletter with curious friends. It takes me 6+ hours each week to curate, simplify the complex, and write this newsletter, so your support means the world to me and helps make this process sustainable.