Last November, HarperCollins announced a deal with Microsoft to license books from its backlist for AI training.

Microsoft offered $5,000 per book, with half going to authors who agreed to participate.

No negotiation—just a choice to opt in or out.

When I first wrote about this, I remember thinking: Who would take this deal?

It felt like an insult—$2,500 for work that likely took years to create.

It also felt like a branding misstep for HarperCollins.

Authors are the heart of their business, the most important relationships they have.

Why jeopardize that trust with such a dismissive offer?

But over the weekend, I came across author Alice Robb’s candid and insightful essay in Bloomberg about facing that very decision (highly recommend reading it).

What her essay captured—what I had missed—is the quiet, complicated reality of what it means to be a working writer in 2025.

Many of the authors approached weren’t actively earning royalties. Their books were sitting on backlists, gathering dust, no longer generating income.

But now, suddenly, they had a price attached—not for the work itself, but for its potential value as training data.

As one author told Robb, “Anything’s better than zero, which is what we’ve been getting for the entire history of AI.”

And then there’s the deeper discomfort—the realization that for many, their work had already been scraped and fed into AI models, without their knowledge or consent.

We’re living through a shift so massive, it’s hard to define—because we don’t know what it will look like on the other side.

The definition of creativity and creative work is being transformed from the core, along with how we value that work.

And it’s all happening faster than we can process.

Faster than we can understand its implications.

Faster than we can legislate.

Faster than we can catch our breath.

And we’re left trying to make decisions in a world where the rules are still being written.

Robb’s essay doesn’t offer answers.

She just shows up with her questions, her uncertainty, and her honesty.

And maybe that’s the point.

We don’t know what the future of creative work looks like in the age of AI.

But we can start by acknowledging the complexity, creating space for honest conversations, and asking better questions—together.

---

Okay, let’s shake that off with a little pop quiz, shall we?

Is this photo 👇 real or AI-generated?

(No cheating—guess before you look.)

This is a real photo of the real Elon Musk with his very real four-year-old son in the real Oval Office.

Real weird that we even have to guess.

And now, here’s what you need to know about AI this week (clickable links appear in orange in emails and underlined in the Substack app):

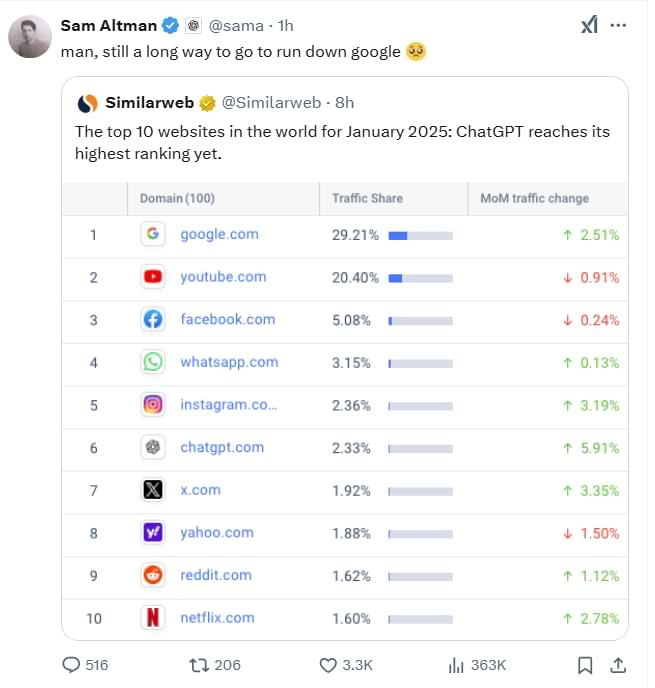

📈 ChatGPT is now the sixth-highest-ranking website in the world.

And it now has 15.5 million paying subscribers. 🤯

In a surprise announcement, Sam Altman revealed OpenAI’s roadmap: things are about to move even faster. 🫠

ChatGPT’s multiple models and expanding capabilities have made it hard for users to navigate.

OpenAI is solving that by combining everything into one seamless system.

Here’s what’s coming:

GPT-4.5 (Orion) arrives in weeks—the last standalone model before everything merges.

GPT-5 follows in months—combining voice, vision, search, and deep research into one system.

No more picking models—ChatGPT will choose the best way to answer based on your query.

Free users get unlimited access to GPT-5, while Plus & Pro users unlock higher intelligence and capabilities.

Separately, OpenAI announced that o1 and o3-mini now support files and images.

If this all sounds like gibberish to you, don’t worry.

Here’s the bottom line:

✅ ChatGPT will be far more powerful and simpler to use.

✅ The gap between casual users and trained power users will widen. If you know how to use these tools to their potential, you’ll have a serious advantage.

AI isn’t hard to learn, but you’ll need to learn it in layers.

It’s moving so fast that without a strong foundation, keeping up will become way too overwhelming.

But if you start now, each new capability will feel like a useful and welcome upgrade.

We are about to get handed superpowers.

How will you use yours?

---

P.S.

I honestly feel bad for most companies. It’s nearly impossible to even keep up with the major advancements each week.

And even if you manage that, you still need to understand the tech deeply enough to anticipate where it’s going—and then figure out what that means for your strategy and business before it’s too late.

Scarlett Johansson calls for deepfake ban after this AI video 👇 goes viral.

As always, Zuck's old hairdo is the giveaway. 😆

If you don’t know what I’m talking about, read this.

AI content licensing agreements now account for about 10% of Reddit’s total revenue thanks to deals with Google and OpenAI—but ads still drive the bulk of its business.

As my friend Morgan pointed out, here’s the math:

10% of revenue = $130M

Google pays $60M, so OpenAI is likely paying ~$70M per year

Fun fact (or at least fun for Sam Altman): his Reddit stake is worth around $2.4 billion.

⚖️ The first big AI copyright ruling is in—and it’s bad news for AI companies.

Thomson Reuters just won a landmark case in the U.S., setting a precedent that could reshape copyright law.

This is an important one, so let’s break it down:

What Happened?

Ross Intelligence trained its AI on Westlaw’s legal summaries to build a competing research tool—without permission or a licensing deal.

Thomson Reuters sued for copyright infringement.

While the tool never showed Westlaw’s summaries directly to users, it still used them as training data.

Note: This case involved non-generative AI.

---

What Did the Court Decide?

A U.S. court ruled against Ross, finding that fair use didn’t apply because:

1️⃣ The AI training wasn’t “transformative”: For fair use to apply, Ross’s AI needed to create something fundamentally different from Westlaw’s legal summaries. Instead, it used them for the same purpose: helping lawyers with legal research.

2️⃣ Ross’s product directly competed with Westlaw: It didn’t just use Westlaw’s content for internal research. It built a rival legal research tool, which hurt Westlaw’s market position.

The evidence of copying was so blatant that the judge said no jury would see it differently.

---

Why This Case Matters

This ruling sets a key precedent that could make it harder for AI companies to claim fair use.

Courts may now decide that using copyrighted material for training—even without displaying it—is still infringement.

If this decision holds, AI firms may have no choice but to start licensing data—or face a wave of lawsuits.

⚖️⚖️ Another day, another AI copyright battle.

Condé Nast, The Atlantic, The Guardian, and other major publishers are suing AI startup Cohere for training on their content without permission or payment, and displaying full articles instead of linking back.

AI isn’t replacing humans. It’s just making customer service more human.

😯 Turns out Allstate’s customer emails are more empathetic when written by AI.

The company now relies on OpenAI’s GPT models—grounded in company-specific terminology—to draft nearly all claim-related messages.

They’re clearer, less jargony, less accusatory, and, ironically, more human than the ones written by people.

Google just added its Veo 2 AI video generator to YouTube Shorts, letting creators turn text prompts into high-quality videos. It’s launching first in the U.S., Canada, Australia, and New Zealand, with plans to expand.

The 2023 animated short film Critterz, originally created with OpenAI’s DALL·E 2, has been remastered using Sora—showing just how far AI filmmaking has come in two years.

Check out the side-by-side below.

🚩 What’s more dangerous than an AI that’s wrong?

An AI that’s wrong—and confident about it. (Sound familiar?)

Researchers are racing to find solutions:

Teaching AI to say “I don’t know”: Training models to admit uncertainty.

RAG (Retrieval Augmented Generation): Connecting AI to real-time data instead of relying on memory.

System Prompts (like Claude): Instructing AI to flag answers that might be unreliable.

Because even the smartest people I know admit when they’re not sure.

That’s what makes them the smartest. 😆

⚠️ Is AI making us dumb—or just lazy?

A Microsoft study shows that as we rely more on AI, we shift from critical thinking to simply checking whether the AI’s output is “good enough,” rather than carefully analyzing and evaluating it.

Also, the more we trust AI, the more we depend on its responses over our own judgment.

So, when it gets it wrong, we’re often too out of practice to catch the mistakes—or solve the problem ourselves.

I’d think about this more, but, you know… 😆

Betrayals. Power plays. Billion-dollar deals.

It’s not The Real Housewives—it’s Silicon Valley’s messiest feud.

The New York Times calls it: “How Sam Altman Sidestepped Elon Musk to Win Over Donald Trump.”

I call it “The Housewives of Silicon Valley: White House Edition.” 🍸

The next plot turn?

Elon Musk has made a $97.4 billion bid to take control of OpenAI’s nonprofit arm, claiming it has strayed from its safety-first mission.

To which Sam responded:

He then took it up a notch in a Bloomberg interview, saying: “Probably his whole life is from a position of insecurity. I feel for the guy…I don’t think he’s a happy person.”

Boys, boys…

This would be more fun to watch if it weren’t ultimately about who’s steering the most powerful—and potentially dangerous—technology of our time.

Turns out, even Formula 1 can’t outrun AI industry drama.

AI search startup Perplexity tried to sponsor Red Bull Racing with a $5M deal. Oracle, Red Bull’s biggest sponsor, blocked it.

Why?

🥊 They’re both bidding to buy TikTok.

🥊🥊 Oracle funds Stargate, a massive OpenAI project which competes with Perplexity.

AI ads dominated the Super Bowl—but most of them fell flat.

ChatGPT’s spot was too abstract and self-important, and it lacked emotion.

And from a brand strategy perspective, it failed to answer the most critical question: Why should I care?

The most interesting thing about the spot? It was designed to work as vertical video too.

I gave an 8-minute AI talk during my annual exam. To my gynecologist. 😁

She’s signing up for a workshop.

Is this update newsletter-appropriate?

Well, it is AI related and one of the highlights of my week.

Plus, I have to break up the more boring sections somehow.

YouTube expands access to its AI-powered auto-dubbing feature, which translates a speaker’s voice across multiple languages.

OpenAI has partnered with Schibsted Media Group to bring real-time news content from Nordic outlets like VG, Aftenposten, and Aftonbladet into ChatGPT.

Adobe introduced what it calls the first “commercially safe” video generation model, trained exclusively on licensed content—without using any customer data.

Christie’s is hosting its first AI art auction, and not everyone is happy about it.

Capital One launched an AI chatbot to help customers research and buy cars. It helps with everything from comparing models and scheduling test drives to exploring financing options.

In case you missed last week’s edition, you can find it 👇:

That's all for this week.

I’ll see you next Friday. Thoughts, feedback and questions are always welcome and much appreciated. Shoot me a note at avi@joinsavvyavi.com.

Stay curious,

Avi

💙💙💙 P.S. A huge thank you to my paid subscribers and those of you who share this newsletter with curious friends and coworkers. It takes me 20+ hours each week to research, curate, simplify the complex, and write this newsletter. So, your support means the world to me, as it helps me make this process sustainable (almost 😄).