I’m coming to you a few hours earlier today because I’m taking my mom to her doctor’s appointment this morning.

Yes, all is fine—but she doesn’t always ask all the right questions. You know how it goes…

By the way, this is my mom 👇, and she’s adorable.

Ok, back to AI because it’s been one wild week.

Tech stocks fell. AI giants like Meta, OpenAI and Nvidia faced hard questions about their future. The entire AI industry was suddenly on edge.

And it was all because of a little-known Chinese AI start-up called DeepSeek.

DeepSeek has owned the news cycle for the past week, spurring competition among Chinese AI companies and stoking fear among their U.S. counterparts.

This story has so many layers and moving parts. It took me way too many hours to make sense of it all—and let’s just say, not everyone has. There are far too many rushed and half-baked takes out there. 🫢

So, I’m dedicating most of this week’s newsletter to explaining what really happened, why it matters, and what’s coming next.

If you’re here for my usual updates, you can find them below this main story (clickable links appear in orange in emails and underlined in the Substack app).

Ok, let’s dive in.

Last week, I told you about DeepSeek’s new R1 reasoning model, which rivals (and in some cases beats) OpenAI’s o1 on certain AI benchmarks including math, coding and complex reasoning tasks—all at a fraction (approximately 5%) of the cost.

That alone is remarkable. But it gets better.

The model is also open-source, which means its architecture and trained weights are publicly available for anyone to study, use, copy and improve.

Researchers, developers, startups and even competitors can build their own products using the same tech, which will speed up AI advancements and give smaller players a better chance to compete—and even disrupt the big boys.

It’s also free for consumers to use.

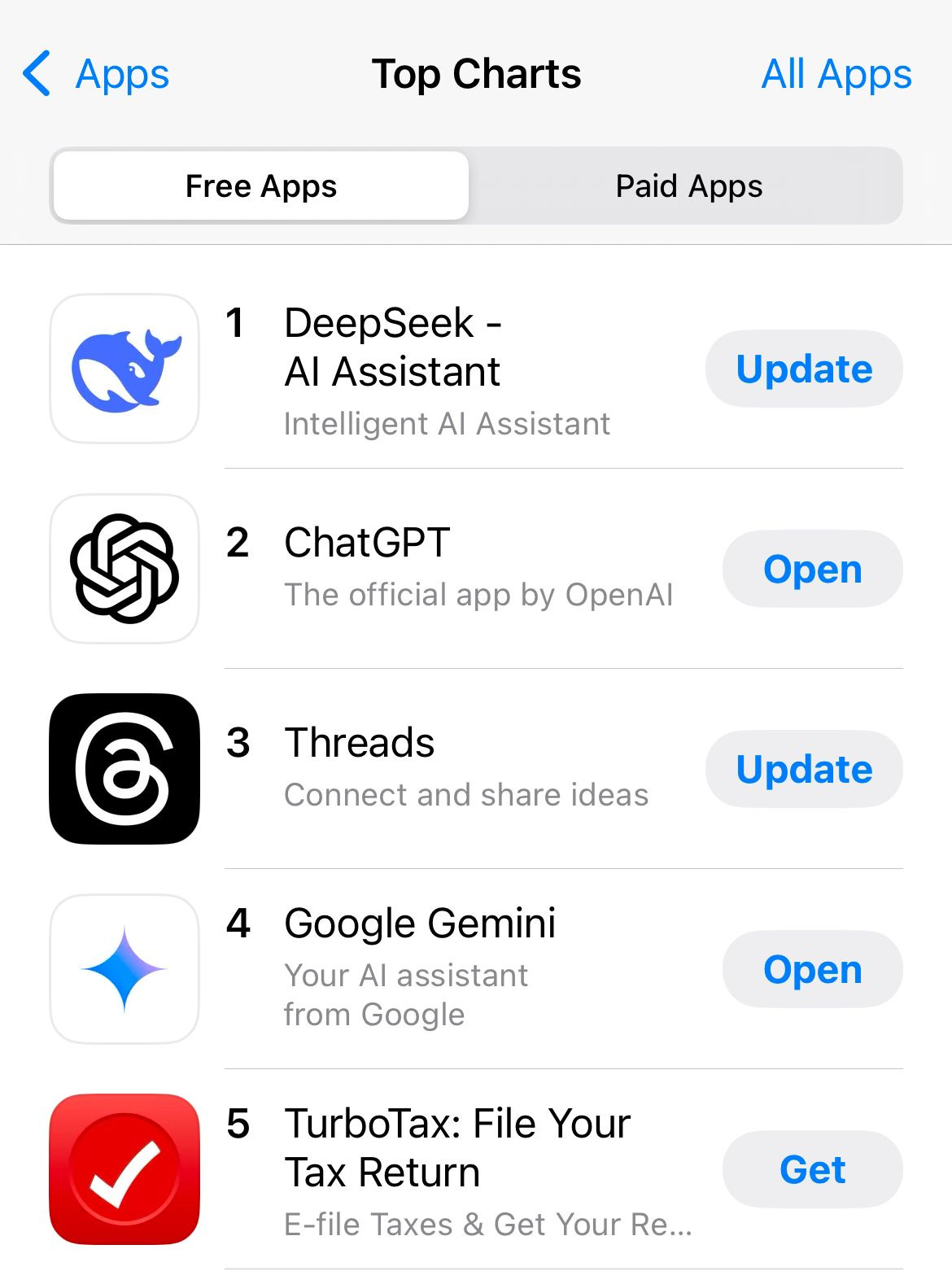

As of last weekend, DeepSeek had overtaken ChatGPT as the top free app in the App Store.

I’ve been using it all week, and it’s impressive. There’s something fun and a little wild about it—an edge most AI models don’t have.

But there are two big caveats to be aware of:

⚠️ Censorship Issues

DeepSeek refuses to answer sensitive questions about China. Ask about Taiwan, democracy, or Tiananmen Square, and it either dodges or redirects.

When I asked if Taiwan was a country, it responded with: "I’m not sure how to approach this type of question yet."

Yet? 🤨

Meanwhile, it answered just fine when I asked about Italy or Greece.

⚠️⚠️ Privacy Concerns

According to its privacy policy, DeepSeek collects user data like device info, keystroke patterns, IP addresses, and chat history—and stores it on servers in China.

To make matters worse, researchers found that DeepSeek had left sensitive user data and chat logs exposed online, raising even bigger questions about its security practices.

So, if you decide to experiment with it (and you should), think twice before entering anything personal or sensitive, and know that you might run into some unexpected and filtered answers.

—

What’s especially surprising is that DeepSeek pulled this off despite U.S. export controls blocking their access to the most advanced AI chips.

They had to get scrappy, relying on new and clever techniques to achieve high performance with fewer, lower-quality resources.

Even more shocking is how quickly the company replicated OpenAI’s achievements: within months, instead of the year-plus gaps we usually see between major advances.

Big Bets, Big Money, Big Questions

On Monday, stocks for major tech firms—including Nvidia, Google, and Microsoft—took a big hit.

Why?

DeepSeek just proved that building top-tier AI doesn’t have to cost billions. That realization hit hard—especially for investors who bet big on Big Tech’s deep pockets as a competitive edge.

The company’s speed in replicating OpenAI’s achievements also highlights a harsh truth: No technical advantage is safe for long—even when companies try to keep their methods secret.

All of this raised many questions for investors:

Is Big Tech’s “spend more, win more” strategy still valid?

If the best AI models don’t require trillion-dollar budgets, what justifies Silicon Valley’s sky-high valuations?

If new companies can replicate cutting-edge models faster and cheaper, what happens to the firms spending trillions on compute and data centers?

Did U.S. AI labs rely too much on expensive chips and compute power instead of exploring smarter and more resourceful approaches?

If Chinese companies can keep up with top models despite export controls, can the biggest U.S. players maintain their lead and competitive edge?

Even Meta, which has branded itself as a champion of open-source models, seemed to be caught off guard. The company reportedly organized “war rooms” to reverse-engineer R1’s approach and might use the same techniques to revamp its own models.

Cost-Effective, But Can It Scale?

At first glance, DeepSeek’s success seems like a direct challenge to companies like OpenAI and Anthropic, which have poured billions into training their closed AI models.

But building a great model is one thing—scaling it to reliably handle rising demand is another.

Training costs may drop, but running AI requires massive compute power.

And while open-source models level the playing field, scaling depends on infrastructure—and that’s where the giants have the edge.

DeepSeek’s more efficient training doesn’t necessarily cut overall compute demand once users come flooding in. Case in point: they had to freeze app registrations this week because they couldn’t keep pace with demand.

Having fewer resources and less infrastructure than the U.S. giants will limit DeepSeek’s ability to scale and keep its momentum.

So, Who Wins?

As top models become commoditized, offering only marginal performance differences, the winners will be the companies with the most compute power and infrastructure muscle—like OpenAI, Meta, Microsoft and Google.

These companies have the data centers, chips, and scale needed to handle rising demand.

This also means value shifts from owning the technology to owning the user experience, where ChatGPT has created the most value to date.

OpenAI will continue to build new models, but its 300M monthly active users, brand trust and ecosystem of tools are much harder to replicate.

And the company isn’t slowing down. Their next reasoning model, o3—rumored to be far more powerful than o1 (or R1)—is expected to launch in the coming weeks. If it delivers, it could raise the bar yet again.

One Last Piece of the Puzzle

A key yet overlooked point: We don’t know how cost-efficient OpenAI actually is. DeepSeek had to stretch its resources because of its constraints.

But for all we know, OpenAI has been using similar optimization techniques this whole time. We just can’t see it.

The Takeaway

DeepSeek revealed Silicon Valley’s blind spots. Cheap, brilliant models are here—but victory goes to those who control the chips, data centers, and scale.

For now, the giants aren’t out—they’re adapting.

So, what does all of this mean for us?

AI products and tools are about to get way smarter, faster, and cheaper.

This will push individual AI adoption even further ahead of employers and companies. 👀

The future is early.

And we’re about to find out what happens when AI moves faster than anyone is ready for.

--

For a deeper dive, I highly recommend this piece from The Verge. And if you’re extra curious and a little nerdy like me, check out this DeepSeek FAQ from Ben Thompson.

⚠️ Meta AI will now use Facebook and Instagram data—including your home location and recently viewed content—to personalize its responses.

And there’s no way to opt out. 👀

It will also “remember” details from past chats.

For Meta, “personalization” is just a fancier way of saying, “Your data is ours.”

The U.S. Copyright Office says AI-assisted work—like de-aging actors, removing objects or enhancing post-production—is still protected by copyright.

But fully AI-generated content, like text-to-image art, is not.

The key difference? A human must substantially contribute to or edit the work for it to qualify.

Why? They argue that:

You can tell AI what you want, but you can't control how it interprets your request.

Even if you spend hours crafting and refining a prompt, the AI might still give you something totally different from what you intended.

That argument makes sense for now. But as AI tools advance and give creators more control, precision and influence over their outputs, copyright law will need to evolve.

And in the case of writing, I have no idea how they could possibly enforce this, since AI detectors don't work.

Plus, anyone who has spent time crafting highly specific, nuanced prompts knows that prompting itself is an art form, one that reflects the creator’s vision, intent, perspective, and taste.

And in the age of AI-powered creative tools, taste is the new currency.

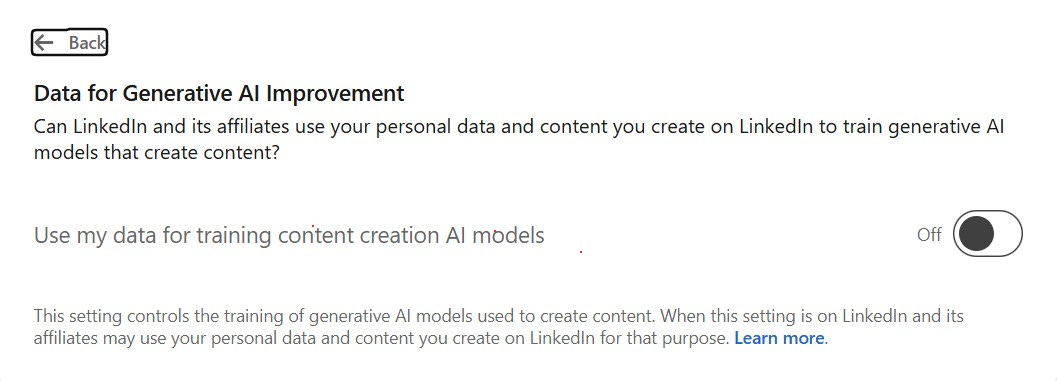

🚨 LinkedIn has been using your personal data for AI training

This includes your profile information, posts, and anything else you share on the platform.

The setting is turned ON by default, giving "LinkedIn and its affiliates" permission to use your data to train generative AI models that create content.

The good news? You can easily turn it off.

📌 To opt out (which I recommend), go to:

Settings and Privacy > Data Privacy > Data for Generative AI Improvement (OFF)

Or click here and turn the toggle to “off”.

LinkedIn is also being sued for allegedly using premium subscribers’ private messages to train third-party AI without their consent.

🏛️ OpenAI Wins Over Uncle Sam

OpenAI has announced ChatGPT Gov, a secure, locally hosted version of ChatGPT designed specifically for U.S. government agencies to keep their data in-house. It:

Supports serious security frameworks

Includes all ChatGPT Enterprise features

Gives agencies control over their own security and compliance

🌶️ But here is my Hot Take:

ChatGPT Gov is a branding play dressed as a product launch. It’s ALL about optics.

OpenAI is betting that trust is the new AI currency. (They’re right.)

By securing the U.S. government as a client, OpenAI:

✅ Gets a massive credibility boost

✅ Positions itself as the safe, trusted, and “vetted” AI provider

✅ Creates the ultimate enterprise sales flex

The next time a big potential enterprise client asks, “Is your AI safe?”, OpenAI can just say, “The White House is using it. Your call.”

Dario Amodei, CEO of Anthropic, the company behind Claude, predicted this week that AI systems could surpass almost all human capabilities by 2027.

Yes, in just two years.

He also said this will require a major rethinking of how our economy and society are organized.

In case you missed last week’s edition, you can find it 👇:

That's all for this week.

I’ll see you next Friday. Thoughts, feedback and questions are always welcome and much appreciated. Shoot me a note at avi@joinsavvyavi.com.

Stay curious,

Avi

💙💙💙 P.S. A huge thank you to my paid subscribers and those of you who share this newsletter with curious friends and coworkers. It takes me 15+ hours each week to research, curate, simplify the complex, and write this newsletter. So, your support means the world to me, as it helps me make this process sustainable (almost 😄).
