🤓 This Week in AI

PLUS: Does AI Avoid Pain, Chase Pleasure, and Try to Win Like We Do?

Happy Friday!

Does AI weigh choices in ways that feel human? A new study put this question to the test and uncovered decision-making patterns that look strikingly similar to our own.

The implications are profound and could change how we see both AI—and ourselves.

But first, here’s what you need to know about AI this week (clickable links appear in orange in emails and underlined in the Substack app):

Oh, wait… Before I move on to my updates, I wanted to let you know that last week’s mystery has been solved, thanks to the BBC. The woman to the right of Elon in the Trump family photo, whose outfit I wanted, is Eric’s wife (Trump’s daughter-in-law).

Also, I can’t believe Elon named his son Techno Mechanicus 🤯.

That poor kid. Well, not literally poor, but you know what I mean.

Ok, now back to the updates.

🏆 OpenAI scored a legal win after a New York judge dismissed a copyright lawsuit by progressive news outlets Raw Story and AlterNet, which claimed their articles were used without permission or compensation to train ChatGPT.

The judge ruled that the outlets failed to show clear harm, partly because ChatGPT synthesizes information rather than copying it word-for-word. This decision, consistent with other recent rulings, suggests that courts may require stronger evidence of damage for AI-related copyright claims.

This ruling may also disrupt a growing trend of AI developers paying publishers to license their content and avoid copyright disputes. OpenAI, for example, struck a licensing deal in May with News Corp (parent company of Dow Jones and the Wall Street Journal) that is reportedly worth more than $250 million over five years, alongside similar multimillion-dollar deals with Axel Springer (owner of Business Insider and Politico), the Financial Times, and the Associated Press.

Of course, OpenAI wasted no time in citing this ruling as grounds to seek dismissal of a similar lawsuit from The New York Daily News.

Meanwhile, Germany’s music rights organization GEMA has filed a lawsuit against OpenAI, accusing it of copyright infringement for using song lyrics to train ChatGPT without proper licensing or compensation to creators.

AI-powered search engine Perplexity has started experimenting with ads in the U.S., introducing “sponsored follow-up questions” that appear next to answers, clearly marked as “sponsored.”

The ads are generated by Perplexity’s AI—not the brands—though brands have some influence over content guidelines.

Initial partners include Indeed, PMG, Universal McCann, and Whole Foods. This ad push contrasts with OpenAI’s ad-free ChatGPT Search and follows plagiarism claims from publishers, which might discourage advertisers.

The Beatles’ ‘Now and Then’ makes history as the first AI-assisted song to earn Grammy nominations, for Record of the Year and Best Rock Performance. Rather than using deepfake technology to recreate John Lennon’s voice, AI helped isolate and clean up his original vocal from a decades-old demo.

To reduce its reliance on Nvidia, Amazon is developing its own AI chips to lower costs, improve efficiency and gain a stronger competitive edge in AI.

With Apple Intelligence’s new AI-generated notification summaries, iPhones are now trying to “summarize” our chaotic lives—but the results are often more hilarious than useful, which, in a way, is its own kind of useful 😁. Check out a few examples below:

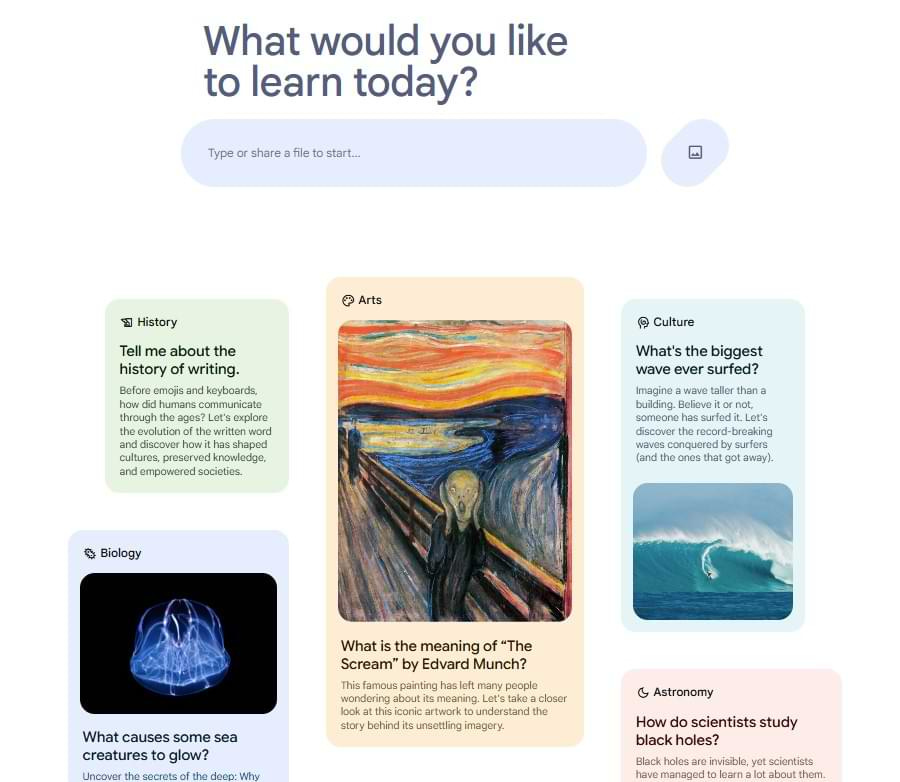

Google has launched 'Learn About,' a new AI tool designed to move beyond traditional chatbot answers by delivering interactive, educational-style responses with visuals, vocabulary-building tools, and follow-up questions to support in-depth learning.

To try the free platform, simply sign up using your current Google account. From there, start by asking a question in the search box in the middle of the screen or upload an image or document to explore further.

For a closer look at how Google’s Learn About compares to ChatGPT in action, check out this article, which tests both tools with the same prompts and highlights each one’s strengths and best use cases.

I’m pretty excited about this. I’ll play around with it and let you know my thoughts.

Sotheby’s just auctioned off a million-dollar portrait of Alan Turing—pioneering mathematician, WWII codebreaker, and the father of modern computing—to an undisclosed buyer.

But here’s the twist: it was painted by Ai-Da, a robot, who whipped it up in just eight hours (that’s the portrait on the right, in case you were wondering).

Only at Sotheby’s could this piece, titled “A.I. God,” be marketed as “a definitive piece for the thinking elite,” assuming the thinking elite can cough up a cool million.

Particle is a new AI news app that aims to direct readers back to publishers by linking to original sources and prioritizing partner content. Key features include multiple story formats (such as simplified summaries and essential facts), a tool for comparing perspectives on polarizing topics, audio summaries, and a Q&A chatbot for in-depth questions.

The app is free to download on iOS for now and works across iPhone and iPad.

I’m still mourning the loss of Artifact, my beloved AI news app, which got quietly swallowed up by Yahoo News earlier this year.

In the new AI world, one month you’re a hot startup, and the next you’re either acquired or obsolete, as big labs like OpenAI roll out new capabilities and features that make your product yesterday’s news. Still, a few savvy ones will manage to thrive.

But I’ll give Particle a try and see if it can replace my old boo.

I realize most of you probably aren't using ChatGPT through work, but for those who are (or who signed up with a work email), here's a heads-up: if you change jobs, your ChatGPT conversation history isn't transferable to a new account. Consider signing up for a personal subscription instead and have work reimburse you for the cost (if your company policy allows this).

The Washington Post has launched “Ask The Post AI,” a generative AI tool designed to answer users’ questions using its extensive news archives from 2016 onward. To ensure reliability and accuracy, it only responds when it finds highly relevant information.

INSIGHT SPOTLIGHT

Does AI Avoid Pain, Chase Pleasure, and Try to Win Like We Do? 🤔

That is the question at the heart of a fascinating new study from Google and the London School of Economics, which asked large language models (LLMs) such as GPT-4 and Claude to choose between simulated “pain” and “pleasure” states to see how they handle trade-offs between comfort and discomfort.

The researchers created a game and gave the AI models a simple choice:

1️⃣ Get more points (explicit goal/best possible outcome)

OR

2️⃣ Avoid "pain penalties" / gain "pleasure rewards"

Think of it like testing how we balance competing priorities.

The result? Some AIs showed surprisingly human-like decision-making.

They’d maximize points when the stakes were low but switch priorities once the “pain” or “pleasure” intensified, even at the cost of “winning” points. In other words, they traded off an explicit goal against emotional motivators, echoing the kinds of decisions we make all the time, such as:

Turning down a higher-paying job because the stress isn't worth it

Paying more for a direct flight to avoid travel hassle

Choosing a closer grocery store over a cheaper one farther away

The other fascinating part?

Each AI showed distinct patterns, like different human personalities:

Some acted like your risk-averse friend: they always avoided potential discomfort or “pain,” chose the safe path, and prioritized well-being over achievement.

Others were strict rule-followers, more like your achievement-focused colleague: they always maximized points, ignored emotional factors, and stuck to the goal no matter what.

And interestingly, models with stronger safety controls made more conservative choices, consistently avoiding ‘harmful’ options regardless of reward.

🤓 Fun fact: Anthropic, the maker of Claude, has an entire team dedicated to shaping Claude’s personality and character, led by Amanda Askell, a researcher with a non-technical background and a PhD in philosophy. And she’s badass.

The researchers were careful to stress that while these AI responses might seem remarkably human-like, they’re purely simulations. The AIs aren’t conscious or actually experiencing emotions; they’re simply reflecting patterns learned from human data.

What does all of this mean?

I'm sitting with this question because the implications feel profound.

Here's what seems clear so far:

AI isn't just a technology. It is a mirror.

It has learned from our collective experiences and is reflecting who we are back to us—our values, patterns, fears and biases.

It shows us how we really make decisions—not how we think we make them.

What we truly prioritize—not what we say we value.

Where our cultural biases live—even the ones we prefer not to see.

How deeply emotion shapes our choices—often more than logic ever could.

Let that sink in for a moment.

The irony isn’t lost on me: It turns out that in order to better understand and steer this most advanced technology, we’ll need to better understand ourselves.

And for a psychology nerd like me, nothing feels more exciting.

In case you missed last week’s post, you can find it 👇:

🤓 This Week in AI

I’d love to start by saying “Happy Friday,” but I know this week has been a rough one for many, and my thoughts are with everyone feeling the weight of it.

That's all for this week.

I’ll see you next Friday. Thoughts, feedback and questions are welcome and much appreciated. Shoot me a note at avi@joinsavvyavi.com.

Stay curious,

Avi

💙💙💙 P.S. A huge thank you to my paid subscribers and those of you who share this newsletter with curious friends and coworkers. It takes me 8+ hours each week to curate, simplify the complex, and write this newsletter, so your support means the world to me: it’s what makes this process sustainable.