🤓 Why ChatGPT's Not Working Like It Used To (and What To Do)

PLUS: This Week in AI

When OpenAI launched GPT-5 last week, it didn’t just add a new model.

It removed all the old ones—including GPT-4o—without notice.

That broke a lot of people’s setups:

Prompts that had been crafted and optimized with surgical precision

GPTs built around specific model behavior

Even everyday ChatGPT responses felt different, and sometimes confusing, to everyone

For some users, though, the loss felt more personal. They had come to rely on GPT-4o for its warmth and personality, often using it as a confidant or a therapist.

To make things worse, the auto-switcher (which is supposed to route queries to the best version of GPT-5) was broken, leading to wildly inconsistent and lower-quality answers.

I wasn’t personally attached to 4o’s “personality,” but I had long, precise prompts and workflows built around it, so I felt it too.

Users weren’t happy. And the backlash was immediate.

The reaction also came with a clear realization: ChatGPT is now such a core part of how we work and live that even swapping out a model causes real disruption.

Over the weekend, OpenAI acknowledged the issues.

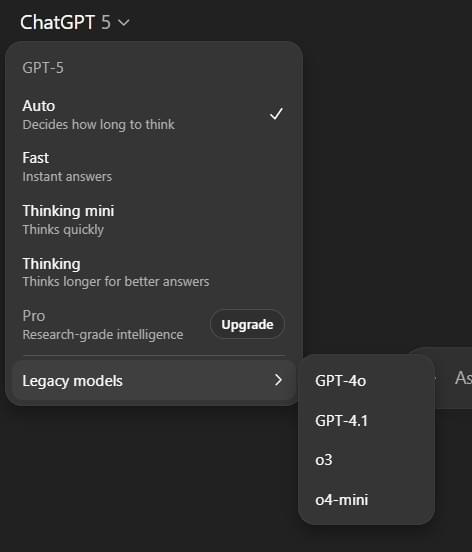

The company has now restored GPT-4o, o3, GPT-4.1 and o4-mini for all paid users (sorry, free tier folks).

And OpenAI CEO Sam Altman said that if they ever plan to phase out a model in the future, they’ll give users plenty of advance notice.

They also simplified the GPT-5 modes and raised the Thinking limit from 200 to 3,000 queries per week for the Plus tier.

But the older models don’t show up by default.

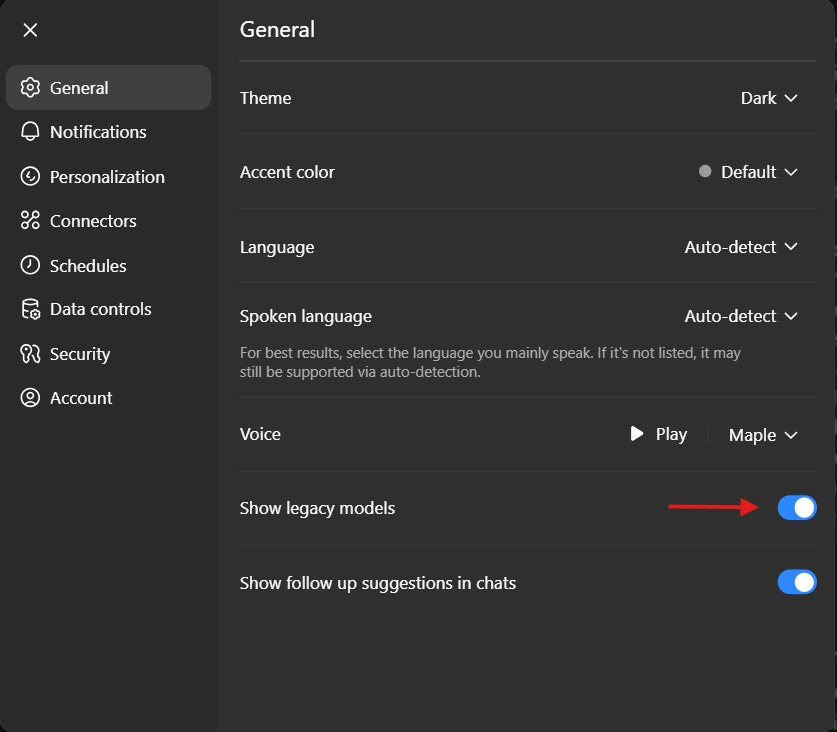

👉 Here’s how to turn them back on (Plus users):

Go to Settings → General → Toggle on “Show legacy models”.

You’ll now see both GPT-4o and o3 under “Legacy models” in the extended model picker.

With every new model, I usually need at least a few weeks to get a feel for how to work with it. I’ve only spent 30-plus hours with GPT-5 so far, which is nowhere near enough time to fully stress-test it.

👉 Here is my top takeaway so far:

GPT-5 is smart. Like, really smart.

It’s especially good at complex planning and “tool use”—actually doing things for you, like finding a file on your computer, booking a table, searching the web, or buying something.

It’s also far better at what’s called “instruction following,” the ability to understand and carry out complex, detailed prompts.

This means the structure and exact wording of your prompts matter more now, and you’ll probably need to tweak and refine some of your existing ones to get the best results.

Just to be clear: the foundations of how to think and interact with AI haven’t changed. But like any new model, GPT-5 brings its own quirks, which take a while to figure out.

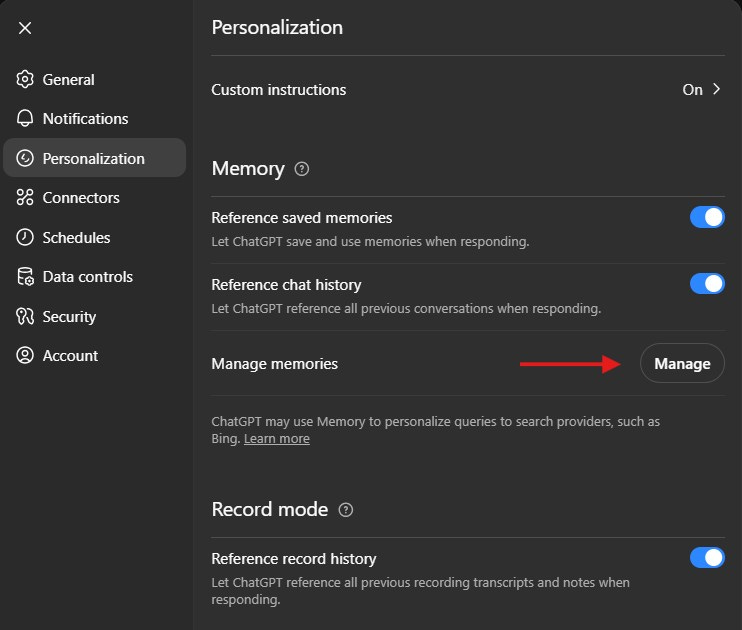

Because GPT-5 follows instructions so closely, it’s also more sensitive to what’s in your Custom Instructions and Saved Memories. So, make sure both are up to date before doing anything important.

👉 Here’s how to update them:

💠Custom Instructions (check out my deep dive here):

Go to Settings → Customize ChatGPT. Make sure your info and preferences are updated.

💠Saved Memories:

Go to Settings → Personalization. Click on “Manage Memory”. Delete anything you no longer want influencing replies.

I’m still figuring out how to adapt my writing prompts for GPT-5, though I’ve made some progress. If I’m on deadline, 4o is still my safety net.

Once I have a better read on how to prompt this new model, I’ll share more—or maybe create a short GPT-5 playbook for paid subscribers.

👉 Until then, here is what I recommend:

💠Review and update your Custom Instructions and Memory.

GPT-5 is more sensitive to both, so small changes here can have a big impact.

💠Be more specific about your goals, desired tone, and format. Provide as much context as possible.

If you’ve been to one of my workshops, pull out the long cheat sheet. It’ll help you create stronger and more precise prompts.

💠For most everyday tasks, use the default “Auto” mode.

The router will choose the best model based on the needs of your prompt. If it needs deeper reasoning, it’ll automatically shift you to “Thinking.”

💠If you were previously using GPT-4o, try “Auto” or “Fast.”

You may need to experiment to see which one feels best. Or access GPT-4o under “Legacy models.”

💠If you were using o3’s reasoning capabilities, try “Thinking.”

It’s slower, but it handles complex tasks like analysis, strategy development, planning, and decision-making with more depth. Or access o3 under “Legacy models.”

I know that’s a lot to take in. If your head’s spinning and you’re not sure where to start, just stick with “Auto”—the router will (hopefully) get you where you need to go.

It’s been working really well for me for the past few days.

What You Need to Know About AI This Week ⚡

Clickable links appear underlined in emails and in orange in the Substack app.

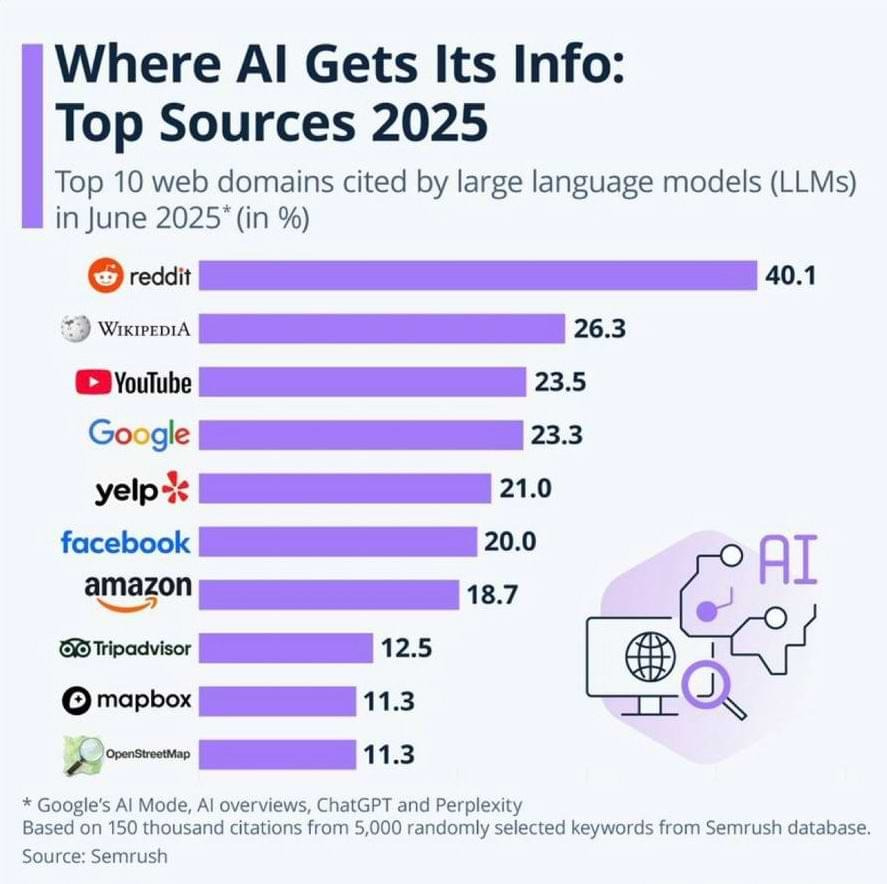

🤔Where is AI getting its answers?

New Semrush data shows the top sources AIs cite most often are Reddit, Wikipedia, and YouTube—with user-generated content leading by a wide margin.

That means fan threads, forum debates, and YouTube explainers are showing up before your official site, social posts, or press materials.

👉 If you’re in marketing or PR, make sure you’ve got clear strategies for the UGC platforms that shape AI answers the most:

Reddit is now a visibility channel. OpenAI and Google both license Reddit content. If there’s a credible thread about your brand, there’s a good chance AI will see and cite it.

YouTube commands the most visual space. When YouTube links appear in ChatGPT or Google’s AI Overviews, they often show up as large, visual video cards mid-answer—crowding out other links. Well-titled explainers, reviews, and cast interviews are more likely to be featured.

Wikipedia shapes the baseline narrative. AI leans on Wikipedia for foundational info, but also uses it to frame context. Make sure your pages are accurate, well-cited, and up to date.

Elon Musk says X (Twitter) will put ads inside answers from Grok—its built‑in AI chatbot—so brands can buy sponsored suggestions at the moment of intent, a bid to revive X’s ad business.

Meanwhile, Google has been talking to advertisers about its plans to include ads in the results of ‘AI Mode’, its conversational AI search experience.

The whole promise of AI search is that it can connect people with exactly what they’re looking for in a more direct and useful way. The moment they have to wonder if an answer was paid for, they stop trusting it.

As trust becomes both more fragile and more essential, OpenAI’s choice to keep ads out of its answers could become a major advantage.

Universal Pictures is adding “no AI training” warnings to its film credits, a strategic move that sets up future lawsuits against AI companies that use pirated copies as training data.

🪄 Hidden Door is an AI storytelling platform that lets fans create stories inside licensed and public-domain worlds—from The Wizard of Oz to Pride and Prejudice.

The platform works directly with authors and publishers, building rules that keep stories true to each world while sharing revenue with rights holders.

I haven’t tested it, so I can’t speak to the execution, but I love the concept. It feels both solid and inevitable.

There’s a long list of iconic IP and plenty of fans who would benefit from a version that actually delivers.

Letting fans co-create inside familiar worlds, with the blessing (and involvement) of rights holders, is exactly the kind of model that could shape where interactive storytelling goes next.

Traction will depend on execution.

Others, including big studios, will try.

Hopefully, a few hit it out of the park.

🥗 Uber Eats is using AI to improve menus: turning users’ photos into polished food images, pulling dish descriptions from reviews, and summarizing long review threads.

Some users can even get paid to upload meal pics.

The goal: less scrolling, easier decisions, and faster orders.

Claude can now search through your previous conversations and reference them in new chats, so you can pick up where you left off in another conversation.

This is basically memory across multiple conversations, which means you don’t have to repeat context each time.

It’s rolling out to Max ($100-$200/month), Team, and Enterprise plans now, with other plans coming soon.

Trump’s Truth Social is partnering with Perplexity to bring AI search to its platform.

In case you missed last week’s edition, you can find it 👇:

🤓 GPT-5 Is Here. And It’s a Big Deal.


And if you want a better sense of how I use GPT-4o and o3, check out this post from two weeks ago. The concepts will help you pick the right model for the right task.

🤓 Stop Using the Wrong ChatGPT Model: How to Pick the Right One


That's all for this week.

I’ll see you next Friday. Thoughts, feedback and questions are always welcome and much appreciated. Shoot me a note at avi@joinsavvyavi.com.

Stay curious,

Avi

💙💙💙 P.S. A huge thank you to my paid subscribers and those of you who share this newsletter with curious friends and coworkers. It takes me 20-plus hours each week to research, curate, simplify the complex, and write this newsletter. So, your support means the world to me, as it helps me make this process sustainable (almost 😄).
